Enterprise organization models for performance engineering

Last updated on Wednesday, February 2026

How enterprises organize for performance engineering

Far from being a specialized task, performance engineering has become a strategic capability that impacts how you deliver, scale, and operate software. The way teams are structured around performance work has a direct effect on testing velocity, coverage, and confidence.

This guide breaks down the three dominant models for organizing performance engineering teams and how to know which one fits your organization.

TL;DR

Performance engineering is about how you structure teams and workflows, not just about testing tools. This guide breaks down the three leading org models (centralized, embedded, hybrid), explains how to scale responsibly, and shows how to overcome common enterprise roadblocks.

Why performance engineering matters for enterprise applications

In high-scale environments, speed and reliability are non-negotiable. Users expect fast experiences. Internal teams rely on predictable systems. And leadership counts on stable delivery pipelines. Without performance engineering, every deployment becomes a gamble.

Performance problems don’t just show up in production; they grow there. Addressing them early, continuously, and across teams is the only sustainable way to scale.

That means performance work can’t live on the margins. It has to be built into development workflows, owned by teams, and supported by tooling that makes testing fast and repeatable.

Done right, performance engineering becomes a force multiplier: fewer incidents, faster releases, and more resilient systems.

Why structure matters

How you structure your teams defines how performance is owned, enforced, and evolved. The right model can help teams deliver faster with fewer regressions. The wrong one creates bottlenecks, tool sprawl, or blind spots.

There’s no perfect structure for every company. But understanding the tradeoffs will help you evolve your model intentionally as your organization grows.

Performance engineering maturity model

As organizations evolve, so do their approaches to performance engineering. They often progress through successive maturity levels as their teams grow, delivery velocity increases, and the need for visibility and scalability becomes more urgent.

This maturity model outlines the typical stages, from early ad hoc testing to platform-enabled scale.

Org model 1: Centralized center of excellence (CoE)

This model consolidates performance engineering into a single expert team that serves the broader organization. It’s often the first step for companies formalizing performance practices, especially in regulated or risk-averse sectors.

A centralized approach simplifies compliance and ensures consistency, but it can’t always keep pace with modern engineering velocity.

Strengths:

- Standardized tooling and processes

- Deep domain expertise concentrated in one place

- Easier to enforce SLAs and compliance

Limitations:

- Slower test cycles due to handoffs

- Becomes a bottleneck for product teams

- Limited visibility or ownership outside the CoE

Best fit for:

- Organizations with strict governance needs

- Early-stage companies new to performance testing

- Workflows where release cadence is slower and predictable

Org model 2: Embedded performance engineers

With this model, performance engineers are integrated directly into product or platform teams. They test what they build and iterate with the team, often as part of the same sprint cycle.

This accelerates feedback and makes performance a shared responsibility, but it introduces inconsistency unless backed by strong internal alignment.

Strengths:

- Fast feedback loops

- Performance built into team culture

- Tight alignment between dev and test priorities

Limitations:

- Inconsistent practices across teams

- Tool sprawl and duplicated effort

- Hard to scale or govern without central coordination

Best fit for:

- DevOps-first orgs with fast CI/CD cycles

- Teams with strong engineering maturity

- Use cases where ownership outweighs uniformity

Org model 3: Hybrid or platform-led model

The hybrid model blends central enablement with distributed execution. A platform team builds infrastructure, standards, and training. Product teams own day-to-day testing using shared tools.

This model supports autonomy without losing control—and it’s the most common pattern among enterprise teams scaling performance testing.

Strengths:

- Combines speed and consistency

- Enables scale across dozens of teams

- Encourages shared responsibility for performance

Limitations:

- Requires investment in tooling and enablement

- Demands strong collaboration and culture

- Needs clear boundaries between platform and product ownership

Best fit for:

- Enterprises supporting 10+ dev teams

- Orgs growing out of centralized bottlenecks

- Companies using a testing platform like Gatling to balance autonomy with governance

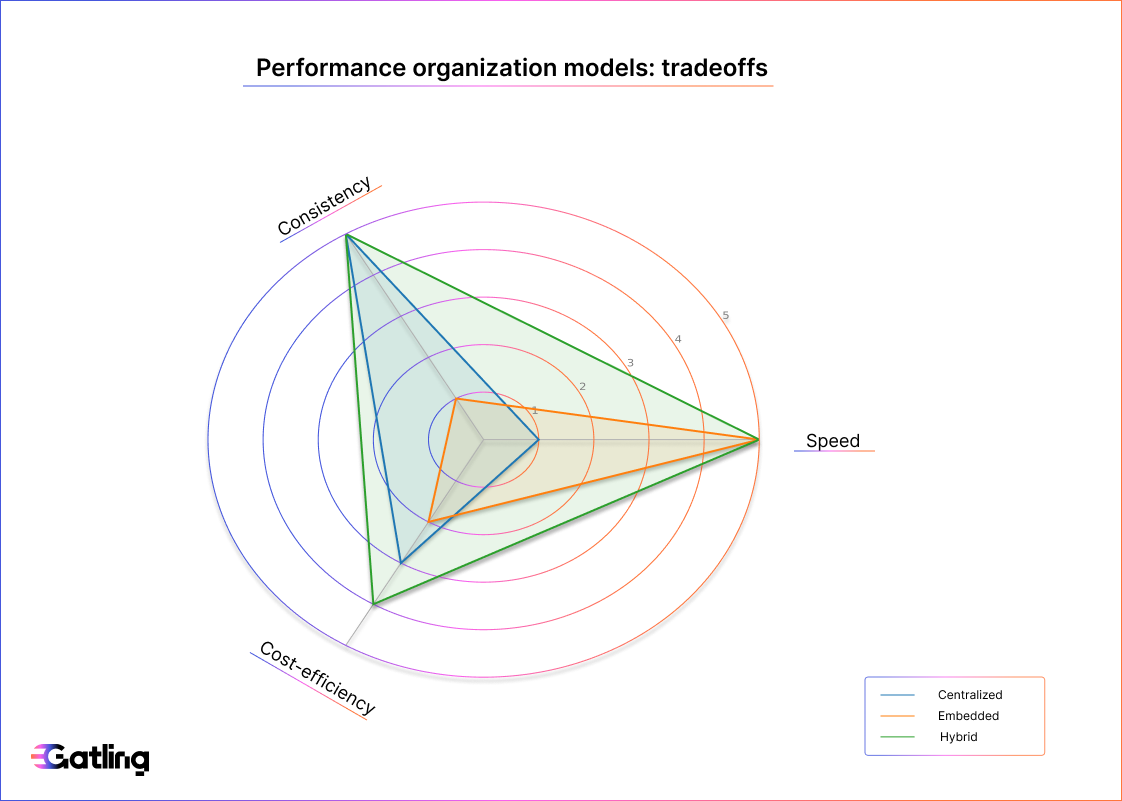

Visual: comparing performance organization models

When to evolve your model

No organization stays static. As teams grow, release cycles shorten, and platform complexity increases, your performance org model has to adapt. What worked when you had five dev teams won’t scale when you have fifty.

Understanding the signs of outgrowing your model is key to staying ahead of bottlenecks and blind spots.

Signs you’ve outgrown your model:

- Test requests are backlogged

- Teams are duplicating scripts or tooling

- No single source of truth for test scenarios or metrics

- Lack of trend visibility across teams or releases

How to overcome common enterprise challenges

Every org hits friction as it grows. Whether it’s duplicated effort or lack of ownership, these issues slow down releases and erode trust in performance data. Here’s how to address the most common ones.

No shared standards

Performance tests are only valuable if they’re repeatable and trusted. If every team writes tests differently—or not at all—it’s hard to compare results or track regressions.

Solution: Start with a CoE or platform team to define test naming conventions, metrics, thresholds, and success criteria. Enforce them with automation.
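As a rough sketch of what "enforce them with automation" could look like, the Python below checks a test definition against a shared standard before it is allowed to run. Everything here is an assumption made for illustration: the `<team>-<service>-<scenario>` naming pattern, the `LoadTestSpec` fields, and the ceiling values are hypothetical and not taken from any particular tool.

```python
import re
from dataclasses import dataclass

# Hypothetical convention: <team>-<service>-<scenario>, lowercase, hyphen-separated.
NAME_PATTERN = re.compile(r"^[a-z0-9]+-[a-z0-9]+-[a-z0-9]+$")

# Hypothetical organization-wide ceilings: teams may be stricter, never looser.
MAX_P95_MS = 800
MAX_ERROR_RATE = 0.01


@dataclass
class LoadTestSpec:
    name: str
    p95_threshold_ms: int
    max_error_rate: float


def validate(spec: LoadTestSpec) -> list[str]:
    """Return a list of violations; an empty list means the spec is compliant."""
    problems = []
    if not NAME_PATTERN.match(spec.name):
        problems.append(f"name '{spec.name}' does not match <team>-<service>-<scenario>")
    if spec.p95_threshold_ms > MAX_P95_MS:
        problems.append(f"p95 threshold {spec.p95_threshold_ms} ms exceeds {MAX_P95_MS} ms")
    if spec.max_error_rate > MAX_ERROR_RATE:
        problems.append(f"error rate {spec.max_error_rate} exceeds {MAX_ERROR_RATE}")
    return problems
```

A check like this could run as a pre-merge step, so that a non-compliant test definition is rejected with a readable message instead of producing results nobody can compare.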

Tool fragmentation

One team uses custom scripts. Another imports from Postman. A third uses an off-the-shelf GUI. The result? Chaos.

Solution: Consolidate onto a single platform that supports diverse workflows but centralizes results, execution, and governance.

Resistance from development teams

When performance testing is a chore, or seen as “somebody else’s job,” adoption lags.

Solution: Integrate testing into existing CI/CD workflows. Make it invisible and automatic. Share early wins: regressions caught, crashes prevented, faster deployments.
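One way to make testing "invisible and automatic" is a pipeline step that compares each run to a stored baseline and fails the build on a regression. The sketch below assumes results are available as simple metric dictionaries where lower is better; the metric names, the 10% tolerance, and the `gate` function are hypothetical choices for illustration.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more than `tolerance`.

    Assumes every metric is lower-is-better (latency, error rate).
    Metrics missing from `current` are skipped rather than treated as failures.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        value = current.get(metric)
        if value is None:
            continue
        if value > base_value * (1 + tolerance):
            regressions[metric] = (base_value, value)
    return regressions


def gate(baseline, current, tolerance=0.10):
    """Print each regression and return a process exit code (0 = pass, 1 = fail)."""
    regressions = find_regressions(baseline, current, tolerance)
    for metric, (old, new) in regressions.items():
        print(f"REGRESSION {metric}: {old} -> {new}")
    return 1 if regressions else 0
```

In a pipeline, a wrapper script would load the two result sets and pass the return value of `gate(...)` to `sys.exit`, so that a non-zero code stops the deployment.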

Limited performance headcount

You can’t hire a performance engineer for every team. But you can scale their impact.

Solution: Invest in no-code and low-code tooling that empowers QA and devs to self-serve. Automate infrastructure provisioning and teardown. Create reusable templates.
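Reusable templates can be as simple as a set of named load profiles that a platform team maintains and product teams adjust. The following is a minimal sketch under that assumption; the `LoadProfile` fields, the template names, and the numbers are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LoadProfile:
    ramp_up_seconds: int
    steady_state_seconds: int
    peak_users: int
    p95_threshold_ms: int


# Hypothetical templates owned by the platform team.
TEMPLATES = {
    "smoke": LoadProfile(ramp_up_seconds=10, steady_state_seconds=60,
                         peak_users=5, p95_threshold_ms=1000),
    "baseline": LoadProfile(ramp_up_seconds=120, steady_state_seconds=600,
                            peak_users=200, p95_threshold_ms=800),
}


def from_template(name, **overrides):
    """Copy a shared template, changing only the fields a team overrides."""
    return replace(TEMPLATES[name], **overrides)
```

For example, `from_template("baseline", peak_users=500)` yields a profile with a higher peak while keeping the shared ramp-up, duration, and threshold. Because the dataclass is frozen, the shared templates themselves cannot be mutated by accident.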

Practical next steps

There’s no one-size-fits-all structure, but there are clear paths forward. Map your current state, align it with delivery goals, and make incremental changes that improve visibility, coverage, and team ownership.

- Assess your delivery model and scale of testing needs

- Start with shared tooling and evolve toward hybrid models

- Build platforms that help teams test without friction

- Define metrics that track both execution and impact

Want to see hybrid performance testing in action?

Gatling Enterprise Edition is built for organizations adopting a platform-led model. It combines code-based authoring with no-code orchestration, lets teams test early and often, and scales seamlessly across cloud and on-prem environments.

- Shift performance left and right with one unified platform

- Simulate millions of users with high-efficiency load generators

- Enable self-service across teams without sacrificing control

- Trigger tests from CI/CD, analyze trends, and version infrastructure alongside code

FAQ

The performance engineering process is a continuous cycle of defining requirements, modeling workloads, creating and executing tests, analyzing results, and feeding insights back into development to improve application performance throughout the software lifecycle.

A successful performance engineering team combines deep technical expertise with strong collaboration skills, operates with standardized tooling and processes, integrates seamlessly into CI/CD workflows, and maintains visibility across development, QA, and operations functions.

A performance engineer specializes in load testing, capacity planning, and optimization, focusing specifically on how systems behave under stress, while a QA engineer typically covers broader functional testing with performance as one component of their responsibilities.

Enterprise teams typically use load testing platforms that support test creation, automation, distributed infrastructure, analytics, and integrations with CI/CD pipelines and observability tools to embed performance validation into their delivery workflows.
