Performance testing maturity: A comprehensive guide

Last updated on Tuesday, November 2025

A widely cited estimate holds that a one-second page slowdown could cost Amazon $1.6 billion in sales a year. When banking applications crash during peak hours, the consequences ripple far beyond technical teams into regulatory compliance, customer churn, and damaged reputation.

Yet despite these high stakes, most organizations approach performance testing with ad hoc methods and reactive measures that leave critical systems vulnerable to failure.

The reality is that most organizations still operate at the initial maturity level of performance testing, relying on sporadic testing driven by urgent issues rather than systematic testing processes.

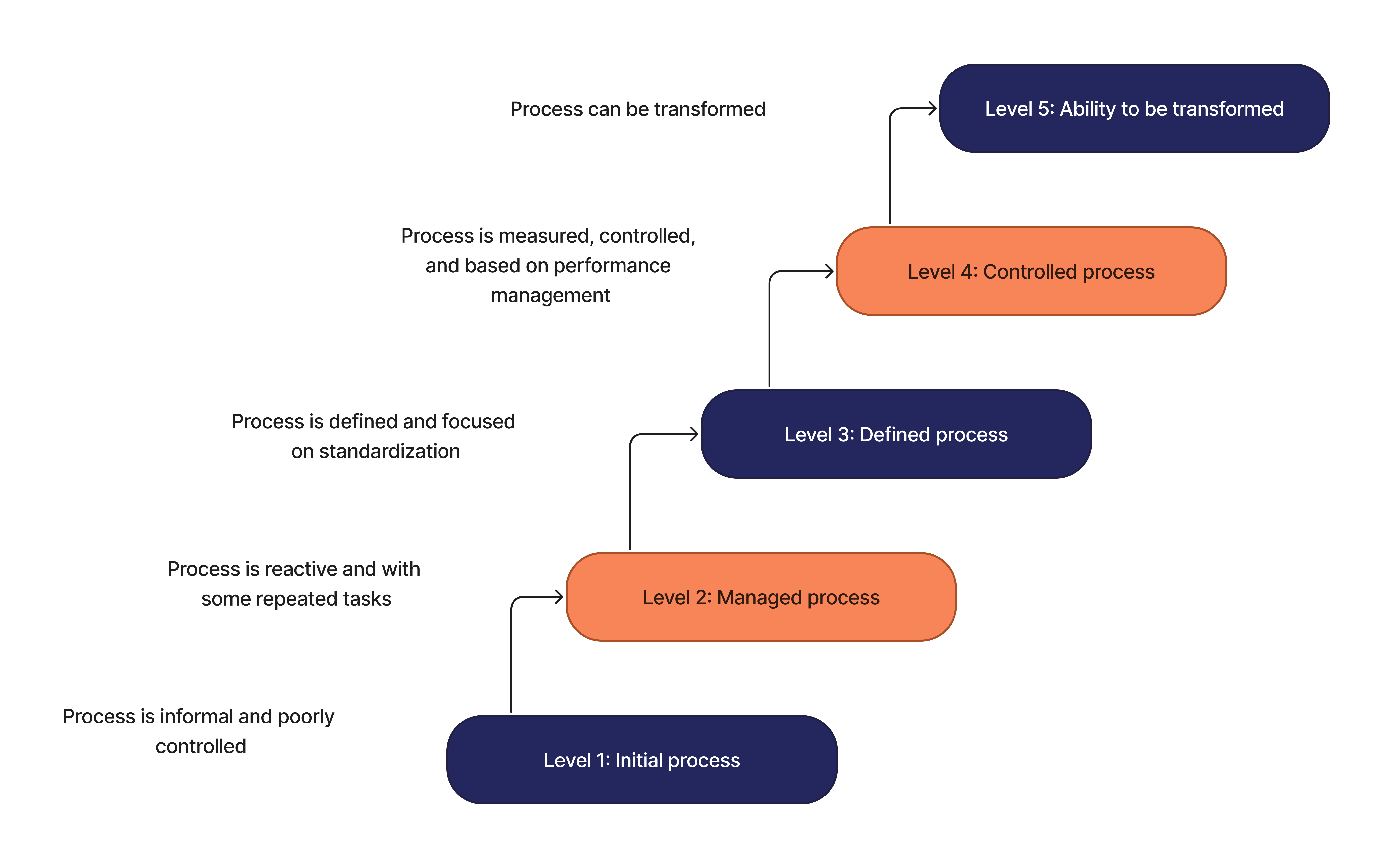

Understanding performance testing maturity models

Performance testing maturity models serve as strategic roadmaps for organizations seeking to evaluate, improve, and optimize their testing practices.

These maturity models provide clear benchmarks that help teams identify their current capabilities, set realistic goals, and plan actionable steps for process improvement.

Organizations with mature testing practices achieve measurable improvements in system reliability, faster deployment cycles, and superior user experiences.

Research from IBM and industry leaders demonstrates that companies advancing through structured maturity levels achieve better testing effectiveness, faster issue resolution, and overall improved performance management.

The cost of poor performance:

- Average IT downtime: $5,600 per minute

- Performance issues increase customer churn by 25%

- Production problems take 5x longer to resolve than issues caught early

The foundation: Capability maturity model integration

These testing maturity frameworks draw their strength from the broader Capability Maturity Model Integration (CMMI) that has guided software development practices for decades.

The capability maturity model provides a structured approach to process improvement that has been successfully adapted beyond software development into performance testing and quality engineering.

Cross-industry research validates that organizations using formal maturity assessment approaches achieve better outcomes in:

- Process standardization: Consistent testing procedures across teams

- Knowledge transfer: Reduced dependency on individual expertise

- Continuous testing integration: Seamless incorporation into development workflows

- Performance measurement: Data-driven decision making

Key benefits of structured maturity models

Organizations implementing formal testing maturity models report significant improvements in cross-team collaboration effectiveness.

These frameworks create common language and shared understanding across development, operations, and business teams about what effective performance testing looks like at each maturity level.

The systematic approach ensures that performance testing improvements align with overall software testing quality initiatives rather than creating isolated pockets of excellence that don't integrate with broader organizational goals.

The four stages of performance testing maturity

Think of testing maturity like learning to drive. Most organizations start by swerving to avoid crashes, then learn basic rules, eventually become skilled drivers, and finally some become professional racers. Here's how to navigate each maturity level:

Stage 1: Initial/ad hoc testing (where most organizations start)

You're here if performance problems surprise you. Testing occurs sporadically, often just before major releases or after problems have already impacted users. About 70% of organizations operate at this initial maturity level.

What it looks like:

- Testing happens reactively after user complaints

- No documented testing processes or procedures

- Inconsistent test results between runs

- Knowledge resides in individual team members

- Testing environments don't reflect production conditions

Common challenges:

- Limited tool expertise across the team

- Unclear performance requirements and acceptance criteria

- Lack of stakeholder buy-in for systematic testing strategy

- Difficulty reproducing performance issues consistently

- Manual testing processes that don't scale

Industry impact: Organizations at this stage experience significantly more production performance incidents than those at higher maturity levels. Research from software quality institutes shows these companies face higher customer churn rates following performance problems.

Moving forward:

- Establish basic performance requirements and thresholds

- Document current testing procedures (even if informal)

- Schedule regular testing cycles before major releases

- Select consistent performance testing tools

- Create reproducible testing environments

Stage 2: Defined/repeatable testing (building your foundation)

You've moved beyond firefighting and created structured, repeatable testing processes that teams can follow consistently.

Organizations have adopted purpose-built performance testing tools and established documented procedures for common testing scenarios.

What it looks like:

- Structured test strategies, test plans, and documented test cases

- Consistent tool usage across testing teams

- Reproducible test results for known scenarios

- Basic performance baselines established

- Test execution integrated into release processes

Key capabilities developed:

- Process documentation: Standardized testing procedures and workflows

- Tool standardization: Strategic selection of performance testing solutions

- Environment consistency: Reliable test environments that mirror production

- Requirements clarity: Specific performance targets and acceptance criteria

Limitations at this stage: While teams have reliable processes for known scenarios, they may struggle with edge cases, complex user journeys, or newly introduced features. Test coverage remains partial, focusing primarily on happy path scenarios.

Advancement indicators:

- Test strategies align with business requirements

- Testing procedures integrated with software development lifecycle

- Consistent results across different team members

- Historical performance data collection begins

Stage 3: Managed and measured testing (performance as part of development)

Performance testing becomes automated, data-driven, and deeply integrated into the software development lifecycle.

Tests are no longer afterthoughts but planned, executed, and analyzed as integral parts of the development process.

What it looks like:

- Comprehensive test automation integrated into CI/CD pipelines

- Performance testing occurs during development, not just before release

- Robust performance metrics collection and trend analysis

- Cross-team collaboration on performance requirements

- Proactive identification of performance bottlenecks

Advanced capabilities:

- Automated test execution: Tests run without manual intervention

- Performance measurement: Systematic tracking of key performance indicators

- Trend analysis: Historical performance data drives optimization decisions

- Risk management: Testing procedures manage performance risks systematically

Integration achievements:

- Testing processes fully integrated with development workflows

- Monitoring and observability correlate test results with production metrics

- Capacity planning capabilities for peak events and traffic spikes

- Automated alerting on performance regressions
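As a minimal sketch of automated regression alerting, the function below compares the metrics of the current test run against a stored baseline and reports any that worsened by more than a tolerance. The metric names (`p95_ms`, `error_rate`), the 10% tolerance, and the assumption that a higher value is always worse are illustrative choices, not part of any specific tool.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return {metric: relative_change} for metrics that got worse.

    Assumes every metric is "higher is worse" (latency, error rate).
    Metrics missing from the current run, or with a non-positive
    baseline, are skipped rather than guessed at.
    """
    regressions = {}
    for name, base in baseline.items():
        if name not in current or base <= 0:
            continue
        change = (current[name] - base) / base
        if change > tolerance:
            regressions[name] = change
    return regressions


# Hypothetical numbers: p95 latency rose from 200 ms to 250 ms.
baseline = {"p95_ms": 200.0, "error_rate": 0.01}
current = {"p95_ms": 250.0, "error_rate": 0.01}
for metric, change in find_regressions(baseline, current).items():
    print(f"REGRESSION {metric}: +{change:.0%} vs baseline")
```

In a CI pipeline, a non-empty result would typically fail the build step or trigger an alert, which is what turns the comparison into a gate rather than a report.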

Stage 4: Optimized/continuous testing (performance-first culture)

This is the pinnacle of testing maturity, where performance work becomes proactive, automated, and designed into systems from the ground up.

Organizations don't just test for performance; they build performance into their development culture.

What it looks like:

- Predictive analysis for capacity planning and performance forecasting

- Performance designed into new features from conception

- Innovation and experimentation in testing approaches

- Continuous performance monitoring and optimization

- Knowledge sharing and industry contribution

Advanced practices:

- Shift-left testing: Performance considerations during system design

- Continuous testing: Automated performance validation with every code change

- Predictive analytics: Forecasting performance issues before they occur

- Process improvement: Regular innovation in testing methodologies
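Predictive analytics can start very simply. The sketch below fits a straight line to the p95 response time recorded for each past release and estimates how many more releases remain before a latency limit is crossed. The linear model, the sample history, and the 260 ms limit are assumptions for illustration; real forecasting usually needs more data and a model that accounts for seasonality.

```python
import math


def linear_fit(ys):
    """Least-squares slope and intercept for ys observed at x = 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def releases_until_breach(history_ms, limit_ms):
    """Releases left before the fitted trend reaches limit_ms.

    Returns None when latency is flat or improving, 0 when the trend
    has already reached the limit.
    """
    slope, intercept = linear_fit(history_ms)
    if slope <= 0:
        return None
    crossing = (limit_ms - intercept) / slope
    remaining = crossing - (len(history_ms) - 1)
    return max(0, math.ceil(remaining))


# Hypothetical p95 latency (ms) for the last four releases.
print(releases_until_breach([200, 210, 220, 230], limit_ms=260))
```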

Organizational transformation:

- Performance-first mindset across all development teams

- Seamless collaboration between development, testing, and operations

- Regular exploration of new testing tools and methodologies

- Contribution to industry knowledge and best practices

Competitive advantages:

- Faster feature delivery without performance compromise

- Superior user experience and system reliability

- Efficient resource utilization and cost optimization

- Industry leadership in performance engineering practices

When do you need performance testing?

Understanding the specific triggers and risk factors that necessitate performance testing helps organizations prioritize their testing efforts and allocate resources effectively.

Critical testing triggers and scenarios

Major releases and infrastructure changes represent high-risk events requiring comprehensive performance validation:

- Significant feature deployments that alter user interaction patterns

- Server migrations, database upgrades, or architectural modifications

- Third-party integration changes affecting system dependencies

- Security updates or patches that may impact system performance

High-traffic event preparation demands proactive load testing to ensure system resilience:

- Seasonal sales events (Black Friday, holiday promotions, end-of-quarter pushes)

- Product and marketing campaign launches

- Industry conferences, media appearances, or promotional activities

- Regulatory deadlines driving concentrated user activity

Performance issue investigation often requires controlled testing to isolate problems:

- User-reported slowdowns, timeouts, or system unavailability

- Intermittent performance degradation difficult to reproduce in production

- Capacity planning for anticipated business growth or user base expansion

- Competitive analysis requiring performance benchmarking

Risk assessment framework for testing prioritization

Business impact evaluation helps organizations focus testing efforts on systems with highest potential consequences:

- Revenue-generating systems and customer-facing applications

- Regulatory compliance systems with legal or financial implications

- Customer satisfaction drivers affecting retention and brand reputation

- Operational efficiency systems supporting internal productivity

Technical risk identification considers system complexity and change history:

- Complex system architectures with multiple integration dependencies

- Components with frequent code changes or recent major modifications

- Historical performance problem areas requiring ongoing monitoring

- Legacy systems with limited documentation or understanding

Resource allocation considerations balance testing thoroughness with practical constraints:

- Available testing time within development and deployment cycles

- Team expertise and tool availability for comprehensive testing

- Budget constraints affecting testing scope and tool selection

- Risk tolerance levels based on system criticality and business impact
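One way to make this framework concrete is a simple scoring pass: rate each system for business impact and technical risk, multiply the two, and test the highest scores first within the available capacity. The 1 to 5 scales, the multiplication rule, and the system names below are hypothetical; a real assessment would calibrate them with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    business_impact: int  # 1 (low) .. 5 (revenue- or compliance-critical)
    technical_risk: int   # 1 (simple, stable) .. 5 (complex, frequently changed)


def prioritize(systems, capacity):
    """Names of the `capacity` systems with the highest risk score."""
    ranked = sorted(
        systems,
        key=lambda s: s.business_impact * s.technical_risk,
        reverse=True,
    )
    return [s.name for s in ranked[:capacity]]


# Hypothetical inventory; capacity models the limited testing time available.
inventory = [
    System("checkout", business_impact=5, technical_risk=4),
    System("reporting", business_impact=2, technical_risk=2),
    System("login", business_impact=5, technical_risk=2),
    System("legacy-billing", business_impact=3, technical_risk=5),
]
print(prioritize(inventory, capacity=2))
```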

Load testing readiness framework

Ready to implement systematic load testing? This section provides a structured approach to establishing robust load testing capabilities that align with your testing maturity goals.

Defining clear objectives and scope

Successful load testing begins with crystal-clear objectives that connect technical testing goals to measurable business outcomes.

Organizations must articulate specific reasons for testing—whether verifying new feature scalability, preparing for anticipated traffic spikes, or investigating reported performance issues.

Objective clarity requirements:

- Quantitative performance targets (response times, throughput, error thresholds)

- Business-critical user flows and system components

- Acceptable performance degradation under peak load conditions

- Success criteria tied to user experience and business metrics
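Quantitative targets are easiest to enforce when they are written down as data and checked mechanically. The sketch below expresses three targets (95th-percentile response time, minimum throughput, maximum error rate) and evaluates one test run against them. The specific numbers and the nearest-rank percentile method are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Targets:
    p95_ms: float          # ceiling for 95th-percentile response time
    min_rps: float         # minimum sustained requests per second
    max_error_rate: float  # allowed fraction of failed requests


def percentile(values, pct):
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate(latencies_ms, errors, duration_s, targets):
    """Return a list of violated targets; an empty list means the run passed."""
    if not latencies_ms:
        raise ValueError("no requests recorded")
    total = len(latencies_ms)
    violations = []
    p95 = percentile(latencies_ms, 95)
    if p95 > targets.p95_ms:
        violations.append(f"p95 {p95} ms > {targets.p95_ms} ms")
    rps = total / duration_s
    if rps < targets.min_rps:
        violations.append(f"throughput {rps:.1f} rps < {targets.min_rps} rps")
    error_rate = errors / total
    if error_rate > targets.max_error_rate:
        violations.append(f"error rate {error_rate:.2%} > {targets.max_error_rate:.2%}")
    return violations


# Hypothetical run: 100 requests in 10 seconds, 10 of them slow.
targets = Targets(p95_ms=500, min_rps=5, max_error_rate=0.01)
print(evaluate([100] * 90 + [900] * 10, errors=0, duration_s=10, targets=targets))
```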

Scope definition essentials: Concentrating testing effort on business-critical user journeys covers the largest share of potential business impact for the time invested. Teams should identify and prioritize:

- Core user interactions that drive revenue and engagement

- System integration points most vulnerable to performance degradation

- Peak usage scenarios based on historical data analysis

- Regulatory or compliance requirements for system availability

Comprehensive use case identification and load estimation

Business-critical user flows represent the foundation of effective load testing strategy.

Teams must systematically identify the user journeys that drive business value—authentication processes, search functionality, transaction workflows, and core interactions that directly impact revenue and customer satisfaction.

Historical data analysis approach: Analytics data provides the foundation for realistic load estimation and performance testing scenarios:

- Traffic pattern analysis including daily, weekly, and seasonal variations

- User behavior patterns and typical session characteristics

- Peak demand identification for predictable events (sales, launches, campaigns)

- Growth trend analysis for capacity planning and scalability testing

Load projection methodology: Effective load estimation requires consideration of both predictable and unpredictable traffic spikes:

- Predictable peaks: Seasonal sales events, product launches, marketing campaigns

- Unpredictable spikes: Viral social media mentions, breaking news events, competitive actions

- Growth scenarios: Planned business expansion and user base growth

- Stress testing: System behavior beyond expected peak capacity
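The projection itself is mostly arithmetic. By Little's Law, the average number of concurrent users equals the session arrival rate multiplied by the average session duration. The sketch below applies that to a peak-hour session count from analytics and derives three scenarios. The 25% growth allowance and the 1.5x stress factor are placeholder assumptions, not recommendations.

```python
import math


def concurrent_users(sessions_per_hour, avg_session_s):
    """Little's Law: concurrency = arrival rate * time in system."""
    arrivals_per_s = sessions_per_hour / 3600
    return arrivals_per_s * avg_session_s


def load_scenarios(peak_sessions_per_hour, avg_session_s,
                   growth=0.25, stress_factor=1.5):
    """Virtual-user counts for expected peak, growth, and stress tests."""
    expected = concurrent_users(peak_sessions_per_hour, avg_session_s)
    grown = expected * (1 + growth)
    return {
        "expected_peak": math.ceil(expected),
        "growth": math.ceil(grown),
        "stress": math.ceil(grown * stress_factor),
    }


# Hypothetical analytics: 36,000 sessions in the busiest hour, 3 minutes each.
print(load_scenarios(36_000, avg_session_s=180))
```

With these inputs, 10 sessions start per second and each lasts 180 seconds, so about 1,800 users are active at once during the expected peak.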

Production environment preparation and fidelity

Test environment fidelity directly correlates with the accuracy of performance testing results.

Production environment mirroring represents one of the most critical aspects of load testing readiness, requiring close replication of hardware specifications, network configurations, software versions, and data volumes.

Infrastructure considerations:

- Hardware specifications: CPU, memory, storage that match production scaling

- Network configuration: Bandwidth limitations, latency characteristics, load balancer setup

- Software versions: Exact matching of application stacks, databases, and dependencies

- Data volumes: Production-scale datasets that reveal realistic performance characteristics

Environmental validation: The closer the test environment is to production, the better test results predict production behavior. Validate in particular:

- Database query performance under realistic data loads

- Caching effectiveness with production-scale content volumes

- Memory usage patterns that reflect actual user data scenarios

- Network latency and bandwidth constraints matching production conditions

Strategic tool selection and test script development

Tool evaluation requires careful consideration of technology stack compatibility, scalability requirements, team expertise, and long-term maintenance overhead.

The testing strategy should align tool selection with organizational maturity goals and technical capabilities.

Tool evaluation criteria:

- Technology compatibility: Integration with existing development and deployment tools

- Scalability capabilities: Ability to simulate realistic user loads and growth scenarios

- Team expertise alignment: Learning curve and skill requirements matching team capabilities

- Maintenance requirements: Long-term script maintenance and updates overhead

Realistic script development: Performance test scripts should accurately simulate real user behavior patterns, going beyond simple HTTP requests to include:

- Complex user journeys: Multi-step workflows reflecting actual user navigation patterns

- Think times and pacing: Realistic delays between user actions based on behavior analytics

- Data parameterization: Varied input data so that caching does not skew results

- Error handling: Realistic response to system errors and edge cases
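The four elements above can be sketched in a tool-agnostic way. The function below models one virtual user walking through a multi-step journey with randomized think times, parameterized input, and a stop-on-error rule. The endpoint paths, the `send` callable, and the 1 to 5 second think-time range are hypothetical; in practice a load testing tool supplies the HTTP client and scheduling.

```python
import random
import time


def run_journey(send, search_terms, rng, sleep=time.sleep,
                think_range_s=(1.0, 5.0)):
    """Simulate one user: login, search, checkout.

    send(method, path, params) is assumed to return an HTTP status code.
    Returns a list of (path, status) for the steps actually attempted.
    """
    steps = [
        ("POST", "/login", {"user": f"user{rng.randrange(1000)}"}),
        ("GET", "/search", {"q": rng.choice(search_terms)}),  # varied input
        ("POST", "/checkout", {}),
    ]
    results = []
    for index, (method, path, params) in enumerate(steps):
        try:
            status = send(method, path, params)
        except Exception:
            status = None  # transport failure counts as a failed step
        results.append((path, status))
        if status is None or status >= 400:
            break  # a real user would not continue past an error
        if index < len(steps) - 1:
            sleep(rng.uniform(*think_range_s))  # think time between actions
    return results


# Dry run with a stub client and no real waiting.
print(run_journey(lambda m, p, q: 200, ["shoes", "hats"],
                  random.Random(42), sleep=lambda s: None))
```

Passing `send` and `sleep` in as parameters keeps the journey logic testable without a network or real delays.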

Step-by-step load testing implementation

This section provides a comprehensive implementation guide that transforms load testing from ad hoc activities into systematic testing processes aligned with performance testing maturity goals.

Phase 1: Requirements analysis and test strategy development

Comprehensive requirement analysis forms the foundation of successful load testing implementation.

Teams must thoroughly understand both functional requirements (what the system must accomplish) and non-functional requirements (performance characteristics and quality attributes).

Requirements gathering process:

- Business requirement analysis: User expectations, regulatory compliance, competitive benchmarks

- Technical requirement assessment: System architecture constraints, integration dependencies

- Performance criteria definition: Response time targets, throughput expectations, error thresholds

- Success metrics establishment: Measurable criteria for test completion and system acceptance

Test strategy documentation:

- Testing scope and objectives aligned with business priorities

- Performance testing approach and methodology selection

- Resource allocation and timeline planning

- Risk assessment and mitigation strategies

Phase 2: Test script creation and validation

Test script development requires systematic attention to realistic user journey mapping and comprehensive scenario coverage.

Scripts should accurately represent how users navigate applications, including timing variations, input data diversity, and error condition handling.

Script development best practices:

- User journey mapping: Comprehensive workflows reflecting actual user behavior patterns

- Data parameterization: Variable inputs preventing unrealistic caching and optimization

- Error scenario inclusion: Handling both successful transactions and failure conditions

- Maintainability design: Modular, reusable scripts reducing long-term maintenance overhead

Validation and verification: Before executing full-scale load testing, validate scripts through:

- Small-scale test runs confirming script functionality

- Performance baseline establishment with single-user scenarios

- Error handling verification under various system conditions

- Script maintenance procedures and update workflows

Phase 3: Systematic test execution and monitoring

Test execution should follow incremental load scaling approaches that reveal how system bottlenecks emerge at different user load levels.

This systematic approach helps teams understand performance degradation patterns and identify specific breaking points.

Execution methodology:

- Incremental load scaling: Gradual user load increases revealing performance thresholds

- Sustained load periods: Extended testing duration revealing memory leaks and degradation

- Peak load simulation: Maximum expected traffic scenarios and stress testing

- Recovery testing: System behavior after peak load periods and failure scenarios
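The execution methodology above maps naturally onto a staged load profile. As a rough sketch, the function below builds a list of (users, duration) stages: equal ramp steps up to the peak, a longer sustained period at peak, and a recovery stage back at the starting load. The stage counts and durations in the example are arbitrary assumptions.

```python
def ramp_profile(start_users, peak_users, steps, hold_s, soak_s, recovery_s):
    """Build [(users, duration_s), ...] for a stepped load test.

    `steps` counts the load levels from start to peak inclusive. The peak
    level is held for soak_s instead of hold_s so that slow degradation
    such as memory leaks has time to show up.
    """
    if steps < 2:
        raise ValueError("need at least two load levels")
    increment = (peak_users - start_users) / (steps - 1)
    stages = [
        (round(start_users + i * increment), hold_s)
        for i in range(steps - 1)
    ]
    stages.append((peak_users, soak_s))       # sustained peak load
    stages.append((start_users, recovery_s))  # observe recovery
    return stages


# Hypothetical profile: 100 to 500 users in 5 levels, 1-hour soak at peak.
for users, duration in ramp_profile(100, 500, 5, hold_s=300,
                                    soak_s=3600, recovery_s=600):
    print(f"{users:>4} users for {duration} s")
```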

Comprehensive monitoring approach:

- Application metrics: Response times, error rates, transaction completion rates

- Infrastructure monitoring: CPU utilization, memory usage, disk I/O, network bandwidth

- Database performance: Query execution times, connection pool utilization, lock contention

- User experience simulation: End-to-end transaction timing from user perspective

Phase 4: Results analysis and actionable recommendations

Systematic analysis approaches reduce issue diagnosis time and improve solution accuracy.

Teams should establish clear procedures for gathering performance data, identifying patterns, and correlating metrics to understand root causes.

Analysis framework:

- Baseline comparison: Performance changes relative to established benchmarks

- Bottleneck identification: Specific system components causing performance degradation

- Root cause analysis: Systematic investigation of underlying performance issues

- Impact assessment: Business consequences of identified performance problems

Recommendation development:

- Technical solutions: Specific code, configuration, or infrastructure changes

- Process improvements: Testing procedure enhancements for future cycles

- Capacity planning: Infrastructure scaling recommendations based on test results

- Monitoring enhancements: Production monitoring improvements for ongoing performance management

Moving through the maturity stages

This section provides specific strategies for organizations to systematically advance through performance testing maturity levels while avoiding common pitfalls and maximizing return on improvement investments.

Stage 1 to stage 2: Establishing foundation processes

The transition from initial to defined testing maturity requires fundamental changes in organizational approach to performance testing. Process documentation becomes the cornerstone for this advancement.

- Documentation requirements: Organizations investing in comprehensive process documentation achieve faster knowledge transfer and more consistent testing outcomes across different team members and projects.

- Tool adoption strategy: Rather than implementing the most advanced tools immediately, focus on solutions that teams can successfully adopt and use effectively. Staged tool implementation approaches result in higher success rates and lower training costs.

Stage 2 to stage 3: Integration and automation advancement

The progression from defined to managed testing maturity centers on integration with continuous integration and deployment pipelines, which turns performance testing from a separate activity into an integral part of the development process.

- Cross-functional team alignment on performance requirements and acceptance criteria

- Shared responsibility models distributing performance testing across development teams

- Regular performance review cycles integrating business and technical stakeholders

- Knowledge sharing sessions transferring expertise across organizational boundaries

Stage 3 to stage 4: Optimization and continuous improvement

Advancement from managed to optimized testing maturity requires sophisticated analytical capabilities and comprehensive feedback loops connecting testing activities with business outcomes.

Cultural transformation indicators:

- Performance-first mindset embedded in development practices

- Proactive capacity planning based on business growth projections

- Regular contribution to industry knowledge and best practices

- Mentorship and knowledge transfer to other organizations and teams

Load testing maturity best practices

Reaching higher maturity levels is only half the battle—maintaining and continuously improving those capabilities is where many organizations struggle.

These proven practices help you sustain progress, avoid regression, and build testing maturity that evolves with your business needs.

Strategic organizational alignment

Business goal integration ensures performance testing efforts support broader organizational objectives rather than existing as isolated technical activities:

- Regular evaluation of testing contribution to user experience improvements

- ROI measurement connecting testing investments to business outcomes

- Stakeholder communication demonstrating testing value and impact

- Performance requirements alignment with competitive positioning and market demands

Risk-based prioritization focuses testing resources on components and scenarios posing greatest threats to business success:

- Formal risk assessment frameworks evaluating business impact potential

- Testing effort allocation based on system criticality and failure consequences

- Regular re-evaluation of risk priorities as business priorities evolve

- Cost-benefit analysis ensuring appropriate return on testing investments

Implementation excellence and process maturity

Early integration into the software development lifecycle keeps performance testing from becoming a deployment bottleneck:

- Performance requirement definition during system design phases

- Architecture review processes considering performance implications

- Code review integration identifying potential performance issues

- Continuous feedback loops connecting development decisions with performance outcomes

Knowledge management and transfer systems spread testing expertise across organizational boundaries:

- Regular training programs building performance testing capabilities

- Documentation standards maintaining institutional knowledge

- Cross-team collaboration reducing individual expertise dependencies

- Succession planning ensuring continuity of testing capabilities

Data-driven continuous improvement methodology

Metrics-based decision making relies on quantitative data rather than intuition for testing process improvements:

- Key performance indicator establishment correlating with business outcomes

- Regular metrics review cycles identifying improvement opportunities

- Benchmarking against industry standards and competitor performance

- Data visualization enabling stakeholder understanding and buy-in

Automation strategy development reduces manual overhead while improving consistency:

- Strategic automation roadmaps balancing effort investment with outcome improvements

- Tool selection criteria emphasizing long-term maintainability and scalability

- Training programs building automation expertise across testing teams

- Regular automation effectiveness evaluation and optimization

The path toward testing maturity

The transformation from reactive, ad hoc performance testing to optimized, continuous testing practices constitutes a fundamental organizational evolution toward performance-first culture and systematic quality engineering.

Organizations with mature testing practices report:

- System reliability improvements: Significant reductions in production incidents and faster issue resolution

- User experience enhancement: Improved customer satisfaction and reduced churn rates

- Operational efficiency gains: Faster deployment cycles and reduced firefighting overhead

- Cost optimization: More efficient resource utilization and capacity planning accuracy

The path toward performance testing maturity requires sustained organizational commitment, appropriate resource allocation, and patient, systematic execution.

However, the destination delivers substantial returns in system reliability, competitive positioning, user satisfaction, and business success.
