Five best practices to optimize your load testing

Whatever stage you are at with load testing your web application, knowing the industry's best practices can make your testing efforts far more effective. Here are five best practices that will improve the quality and accuracy of your load tests.

Ensure your user journey is accurate

This point cannot be taken lightly. Most systems and applications are stateful. For example, you can't remove items from a shopping cart without first registering an account, logging in, and adding items to the cart. Your tests need to model these user journeys accurately so that they reflect how people really use your website.

Does your system follow an open model, where new users keep arriving regardless of how many are already active, or a closed model, where a fixed number of users each start a new session only after finishing the previous one? At what rate do users typically arrive? Once they arrive, how long do they usually stay, and which transactions do they typically perform? You need accurate answers to all of these questions to create a realistic user journey and load test.

Getting this wrong means you are not measuring how the live system is actually used, so your test results will be inaccurate and of less value.
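To make the open/closed distinction concrete, here is a minimal Python sketch of how the two models schedule users. It assumes Poisson arrivals (exponentially distributed gaps) for the open model and a constant journey duration plus think time for the closed model; the function names and parameters are hypothetical, and in a real closed system the pacing would depend on actual response times rather than a constant.

```python
import random


def open_model_arrivals(rate_per_sec: float, duration_sec: float, seed: int = 42) -> list[float]:
    """Open model: users arrive at an average rate, independent of how many are active.

    Gaps between arrivals are drawn from an exponential distribution (a Poisson process).
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    arrivals = []
    t = rng.expovariate(rate_per_sec)
    while t < duration_sec:
        arrivals.append(t)
        t += rng.expovariate(rate_per_sec)
    return arrivals


def closed_model_starts(concurrent_users: int, journey_sec: float,
                        think_sec: float, duration_sec: float) -> list[float]:
    """Closed model: a fixed pool of users, each starting its next journey only
    after finishing the previous one and pausing for think time.

    Simplifications (assumptions): all users start at t=0 and every journey
    takes exactly journey_sec.
    """
    starts = []
    for _ in range(concurrent_users):
        t = 0.0
        while t < duration_sec:
            starts.append(t)
            t += journey_sec + think_sec
    return sorted(starts)


arrivals = open_model_arrivals(rate_per_sec=5.0, duration_sec=60.0)
print(f"Open model: {len(arrivals)} users arrived in 60 s (about 300 expected on average)")

starts = closed_model_starts(concurrent_users=10, journey_sec=4.0, think_sec=2.0, duration_sec=60.0)
print(f"Closed model: {len(starts)} journeys started in 60 s")  # 10 users x 10 journeys = 100
```

The practical difference: in the open model, arrivals keep coming even if the system slows down, whereas in the closed model a slower system automatically reduces the rate at which new journeys start, which can hide problems if your real traffic behaves like an open system.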

Use dynamic test data

Most systems have caches that store frequently requested data. If your load test logs in as the same user and visits the same area of the system every time, it will mostly exercise those caches, which is not a realistic simulation of real-world traffic. Use varied, dynamic test data so that you test the application itself, not just its caches.

To build this data, you may need to create datafiles that hold the parameters you pass to the test, such as usernames and passwords. Depending on the type of test data required, your load testing tool may also be able to generate it dynamically at runtime.
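As a minimal sketch, assuming a CSV datafile with `username` and `password` columns (the file layout, account naming scheme, and function names are all hypothetical), the Python below writes a datafile of test accounts, hands out one record per virtual user in round-robin order, and generates a unique value at runtime. An in-memory buffer stands in for a real file; on disk you would open the file with `newline=""`, as the `csv` module recommends.

```python
import csv
import io
import itertools
import uuid


def write_user_datafile(f, count: int) -> None:
    """Write hypothetical test accounts (username, password) to a CSV datafile."""
    writer = csv.writer(f)
    writer.writerow(["username", "password"])
    for i in range(count):
        # Hypothetical naming scheme; use whatever accounts exist in your test environment.
        writer.writerow([f"loadtest_user_{i:04d}", f"pw-{i:04d}"])


def circular_feeder(f):
    """Yield one record per virtual user, wrapping around when the datafile runs out."""
    records = list(csv.DictReader(f))
    return itertools.cycle(records)


def generated_search_term() -> str:
    """Runtime-generated data: a fresh value per request, so responses can't all come from cache."""
    return f"item-{uuid.uuid4().hex[:8]}"


buf = io.StringIO(newline="")  # stands in for open("users.csv", "w", newline="")
write_user_datafile(buf, count=3)
buf.seek(0)

feeder = circular_feeder(buf)
for _ in range(4):
    print(next(feeder)["username"])
# loadtest_user_0000, loadtest_user_0001, loadtest_user_0002, loadtest_user_0000

print(generated_search_term())  # different on every call, e.g. item-3f9a1c2e
```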

It may also be necessary to populate a database with test data before each load test run to ensure an accurate and realistic test.
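A minimal sketch of seeding before each run, using Python's built-in `sqlite3` module as a stand-in for your application's real database. The `products` table and its columns are hypothetical; the point is that each run drops and recreates the data, so every test starts from the same known state.

```python
import sqlite3


def seed_products(conn: sqlite3.Connection, count: int) -> None:
    """Reset and repopulate a hypothetical products table before a load test run."""
    with conn:  # commits on success, rolls back if an insert fails
        conn.execute("DROP TABLE IF EXISTS products")
        conn.execute(
            "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT NOT NULL, stock INTEGER NOT NULL)"
        )
        conn.executemany(
            "INSERT INTO products (id, name, stock) VALUES (?, ?, ?)",
            ((i, f"product-{i}", 100) for i in range(1, count + 1)),
        )


conn = sqlite3.connect(":memory:")  # stand-in for the system under test's database
seed_products(conn, count=1000)
print(conn.execute("SELECT COUNT(*) FROM products").fetchone()[0])  # 1000
```

Because the table is dropped and recreated, running the seed step again before the next test gives the same 1,000 rows rather than accumulating leftovers from previous runs.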

Involve a wide range of stakeholders

Load testing should be the responsibility of everyone involved with the system. Business and analytics-focused people often have information that the development team cannot easily access, and this information should be used to craft accurate load scenarios.

It is both unfair and unrealistic to expect the team's developers and testers to understand exactly how end users will use the system. Developers also often have a mental picture of how they “think” users will interact with the system that turns out to be quite different from reality. Involving a broad range of stakeholders across the business helps mitigate this.

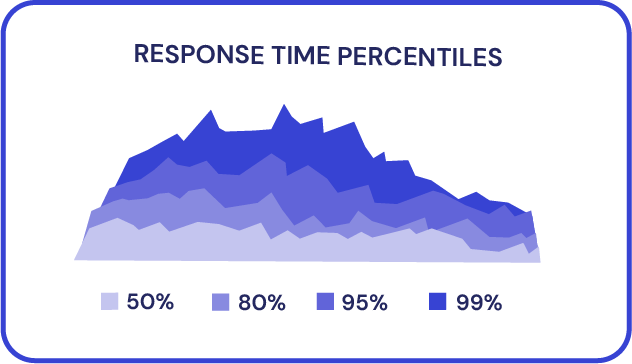

Focus on response time percentiles, not averages

A system’s average response time is an insufficient measure of its performance. A handful of outlying times can skew the average significantly, making system performance seem far better (or worse) than it really is.

A better metric is the nth percentile, such as the 90th percentile. This metric can be read as “90% of responses from the system took this long or less”. Percentiles also have the added benefit of being easier for business stakeholders to relate to when reporting.
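The Python sketch below uses made-up response times to show how two slow outliers distort the mean but not the lower percentiles. It uses the nearest-rank definition of a percentile; load testing tools may use other definitions (for example, interpolating between samples), so their figures can differ slightly.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in ms: 18 typical requests plus 2 slow outliers.
times_ms = [100, 102, 98, 105, 97, 101, 99, 103, 100, 104,
            96, 101, 99, 102, 100, 98, 103, 97, 2000, 2500]

mean_ms = sum(times_ms) / len(times_ms)
print(f"mean: {mean_ms:.2f} ms")  # mean: 315.25 ms
for p in (50, 90, 95, 99):
    print(f"p{p}: {percentile(times_ms, p)} ms")
# p50: 100 ms, p90: 105 ms, p95: 2000 ms, p99: 2500 ms
```

A mean of about 315 ms suggests users wait three times longer than most of them really do (90% of responses took 105 ms or less), while p95 and p99 reveal the slow tail. Reporting several percentiles together gives both pictures.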

Average response time still has a place in the load test report, since all data about system performance is valuable. But it must be treated with caution and should not be relied on as the primary measure of the application’s performance.

Make performance testing part of the agile development process

It can be tempting to wait until the end of the project to start performance testing, since by then you may be able to build a test environment whose application closely matches the one that will run in production. However, waiting until this point in the project lifecycle to begin performance testing can prove to be a costly mistake.

Assessing the performance of your system only at the end of the project makes it difficult, costly, and sometimes impossible to fix issues. A far better approach is to incorporate performance testing early in your project as part of your continuous integration build. Define clear criteria for what is and isn’t an acceptable level of performance, and measure every run against them. Test early, test often.
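A minimal sketch of such a check in Python, which a CI step could run after the load test. The thresholds (p95 under 500 ms, at most 1% failed requests) and all function names are hypothetical examples of clearly defined criteria, and the sketch assumes you have already extracted per-request response times and a failure count from your load testing tool's results, which is tool-specific and not shown.

```python
import math

# Hypothetical acceptance criteria, agreed with stakeholders before testing starts.
CRITERIA = {
    "p95_ms": 500.0,          # 95% of requests must complete within 500 ms
    "max_error_rate": 0.01,   # at most 1% of requests may fail
}


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile of the samples."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def find_violations(response_times_ms: list[float], failed_requests: int, criteria: dict) -> list[str]:
    """Compare one run's results with the criteria; an empty list means the run passed.

    Assumes response_times_ms holds one entry per request, failed ones included.
    """
    violations = []
    p95 = percentile(response_times_ms, 95)
    if p95 > criteria["p95_ms"]:
        violations.append(f"p95 of {p95:.0f} ms exceeds {criteria['p95_ms']:.0f} ms")
    error_rate = failed_requests / len(response_times_ms)
    if error_rate > criteria["max_error_rate"]:
        violations.append(f"error rate of {error_rate:.1%} exceeds {criteria['max_error_rate']:.1%}")
    return violations


def ci_exit_code(response_times_ms: list[float], failed_requests: int, criteria: dict = CRITERIA) -> int:
    """Print a verdict and return a process exit code: 0 passes the CI step, 1 fails it."""
    violations = find_violations(response_times_ms, failed_requests, criteria)
    for v in violations:
        print(f"FAIL: {v}")
    if not violations:
        print("PASS: all performance criteria met")
    return 1 if violations else 0
```

In a CI job, the script would end with `sys.exit(ci_exit_code(times, failures))`, so a non-zero exit code fails the build whenever a run falls outside the agreed criteria.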