Step-by-step: Easily deploy and test your AI application using Gatling and Koyeb

Last updated on

Wednesday

October

2025

Step-by-step: Easily deploy and test your AI application using Gatling and Koyeb

The explosive growth of AI applications over the past year has transformed the software landscape, with companies rapidly deploying AI-powered services across all industries. As these AI applications move from experimentation to production environments, robust testing and deployment strategies have become critical. To address this need, we hosted a webinar with Yann Leger from Koyeb.

Koyeb is a serverless platform that enables fast deployment of applications, APIs, and databases in high-performance microVMs worldwide. The platform is language-agnostic and supports various deployment methods (Git, Dockerfile, container registries), while providing enterprise-grade features including global load balancing across 255+ edge locations, multi-region deployment across 6 locations, automatic scaling, and many more.

In this article, we'll give you a step-by-step guide on how to use Koyeb and Gatling to easily deploy and load test your AI applications, ensuring they perform optimally under real-world conditions. You can also access the Replay of the Webinar we did on this topic.

Easily deploy and test your AI application

Webinar

Easily deploy and test your AI application

Why should I load test AI applications?

With more and more AI applications being deployed, load testing has become increasingly important because of:

- Resource-Intensive Operations: AI models require significant computational resources. Load testing helps ensure your infrastructure can handle multiple concurrent requests without degrading performance or experiencing failures.

- Memory Management: AI models can consume substantial memory, and improper handling of multiple requests could lead to memory leaks or resource exhaustion. Load testing helps identify performance issues under real world usage.

- Integration Performance: AI applications often integrate with multiple services (vector databases, model providers, preprocessing pipelines). Load testing reveals how your full architecture perform under stress and helps identify potential bottlenecks in the overall system architecture.

Benefits of using Koyeb & Gatling

The integration of Gatling and Koyeb offers a powerful toolkit for deploying and load testing AI applications. This approach provides several key benefits:

- Realistic Testing Environment: Koyeb enables you to deploy applications in a cloud-native environment, making it easy to create realistic, scalable test setups that closely mimic production.

- CI/CD Integration: As demonstrated, this setup integrates seamlessly into your CI/CD pipeline, enabling automated performance testing with every code change.

- Comprehensive Reporting: Gatling's detailed reports offer valuable insights into your application's performance under various load conditions

- Low Deployment effort : Koyeb’s platform allows you to deploy your AI applications effortlessly ( one click App,…) , reducing operational overhead while ensuring consistent performance across various environments.

Step-by-step guide to load test your AI apps

1/ Setting Up the Project

First, ensure you have Gatling installed and set up. If you haven't already, download it from the official documentation. Next, create an account on the Koyeb platform and on the Qdrant platform (the vector database we used for this webinar).

You can now clone the project from GitHub, open the webinars/Koyeb folder and upload the snapshotqdrant.snapshot file found in the repository on Qdrant Cloud, if you need guidance, please refer to their documentation.

2/ Deploying the application

Ollama

To deploy Ollama on Koyeb, we will use their one-click app offering. Go to the Ollama one-click page and click on the button labeled "Deploy". You will be redirected to a Koyeb page with everything configured for your image. Simply click on deploy and save the public URL that Koyeb provides you.

After that, we need to install our Phi-3 model on our instance. To do this, simply use:

Now that our Ollama is set up, we will set up the machine for our application.

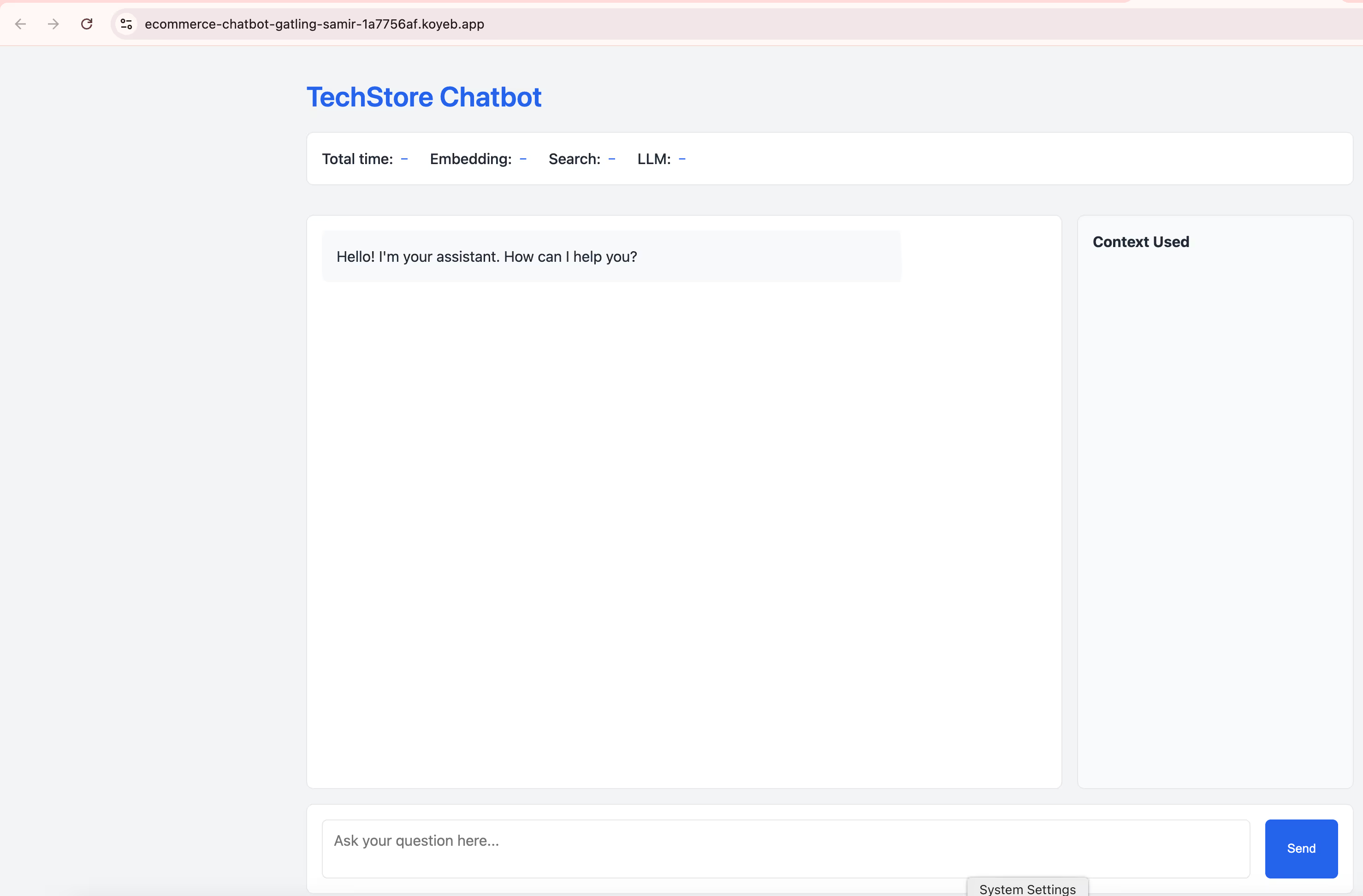

AI Chatbot

To install your application whenever we have an update, we will use Koyeb GitHub Actions deploy. In our case, here are the specifications we need:

- Four secrets: Ollama and Qdrant URLs, Qdrant API Token, and Koyeb API Token

- A small machine with Docker Runtime

First, you will need to create API tokens for Qdrant and Koyeb - please refer to their documentation for instructions. Then add these tokens as secrets in your GitHub repository configuration. Once this is done, you will be all set up to launch this workflow.

This workflow sets up the Koyeb CLI, adds our secrets to Koyeb, and finally builds our application with our environment variables on a small Koyeb machine using our Dockerfile.

At the end of the workflow, you can go to Koyeb where you will see a newly created application. Don't forget to copy the URL.

3/ Launching the Gatling Simulation Locally

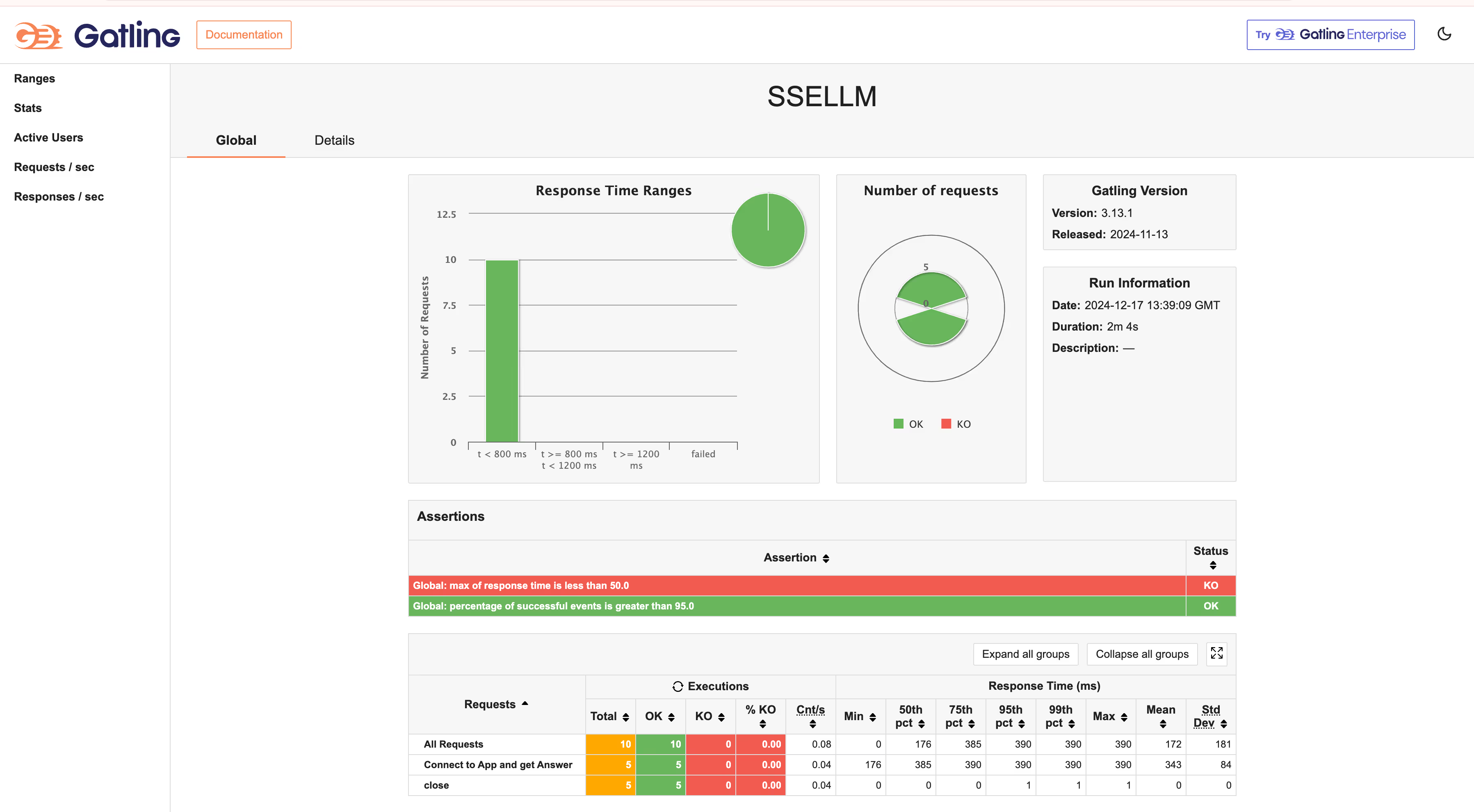

In this repository, we also have inside the gatling folder a Gatling simulation that allows us to load test our application. This simulation tests our website by calling the chat endpoint of our API. It uses a CSV feeder to provide test data and sets up an HTTP protocol with a base URL also after we connect to our endpoint using Server sent event (SSE).

The test injects a burst of 5 users and the simulation includes assertions to ensure that the response times are under 50 milliseconds and that the success rate is above 95%.

To launch the load test, open a terminal and run (The best practice is to run Gatling on another machine from the API):

🚧 Don’t forget to change the URL in the HTTP protocol 🚧

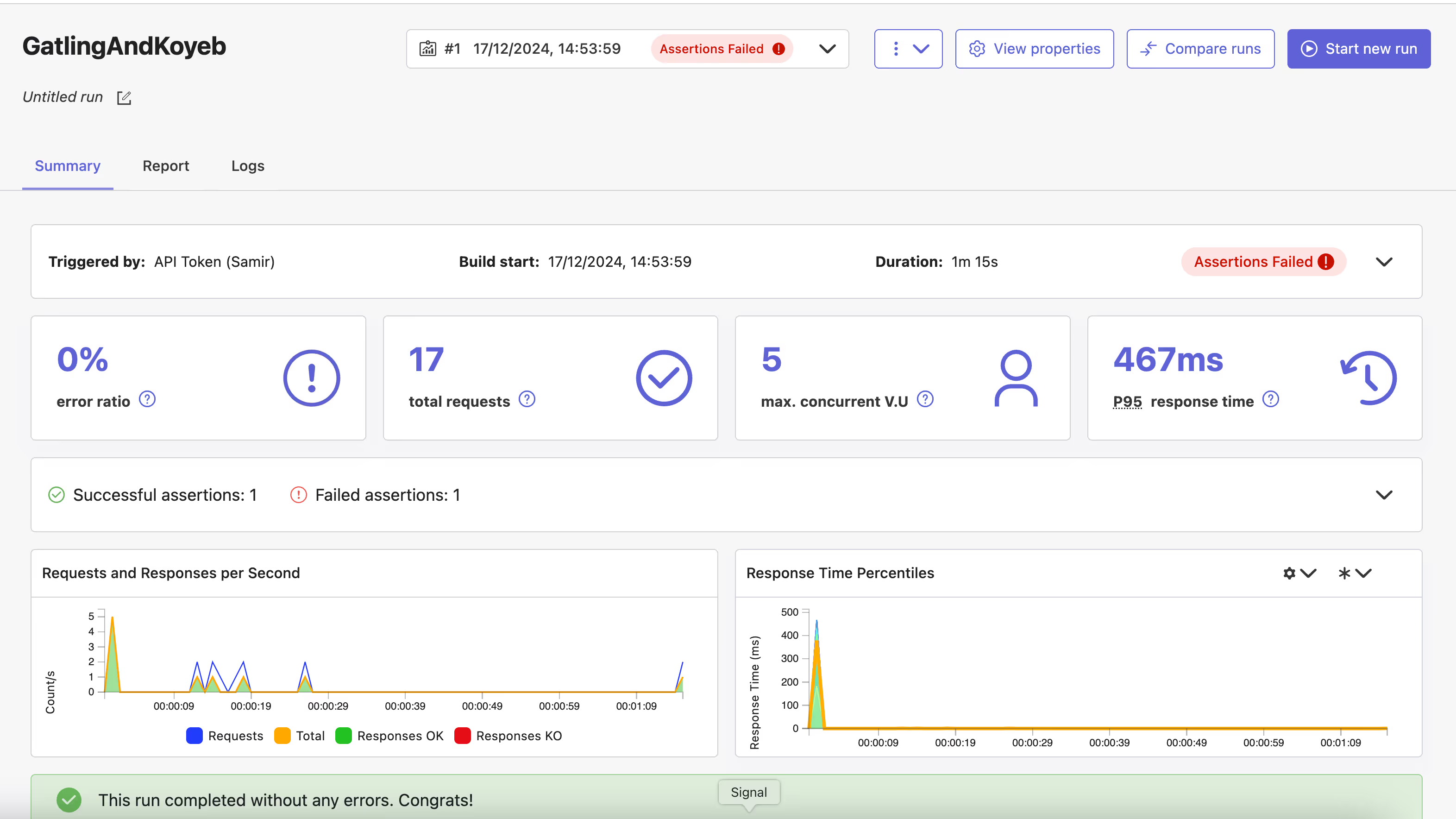

After the simulation is complete, Gatling generates an HTML link in the terminal To access your report. Review metrics such as response times, successful and failed connections, and other indicators to identify potential issues with your service. For instance, we observe that one of our assertions fail.

4/ Launching the Gatling Simulation in CI/CD

We've explored the local load testing of your application using Gatling open-source. However, each developer commit triggers a CI/CD pipeline to test and deploy the application in an enterprise workflow.

Gatling Enterprise integrates seamlessly with GitHub Actions, GitLab, Buildkite, and other CI/CD tools.

Let's set it up with GitHub Actions and Gatling Enterprise.

Prerequisites

You'll need to create an API key with configure permission on Gatling Enterprise. Refer to our documentation for instructions. Also, add the GitHub action to your repository and set up the secret.

You can also check the GitHub Actions documentation to see the possible customizations.

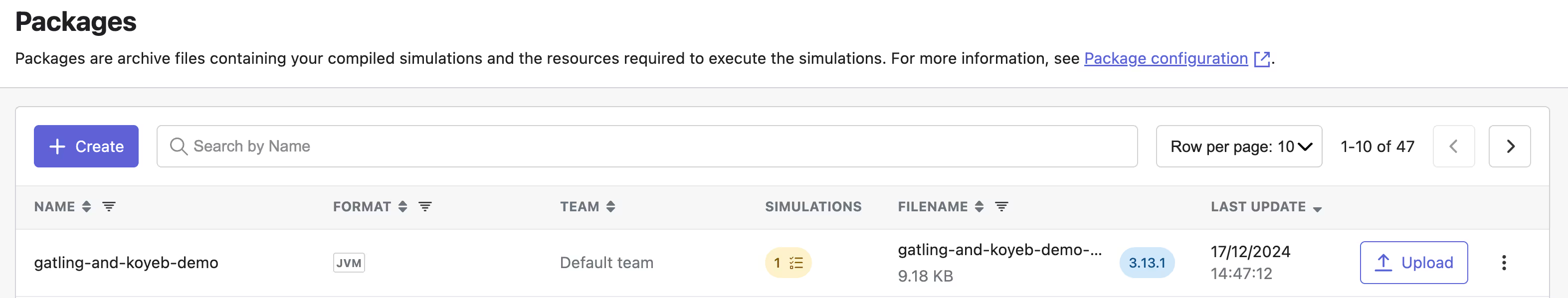

Deploy the Package

To deploy your package on Gatling Enterprise simply run these commands in the gatling folder:

Now, if you navigate to Gatling Cloud → Package, you'll see a new package named gatling-and-koyeb-demo.

Get the Simulation ID

Next, create a simulation by navigating to the "Simulations" tab and clicking "Create New." Select your package from the dropdown list, then click "Create" and configure your simulation settings.

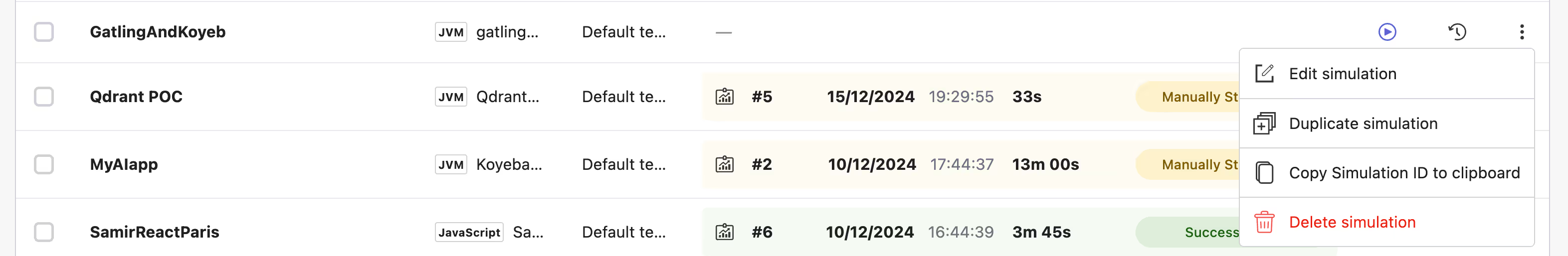

Now, if you click on the "Simulations" tab on the left, you'll see a list of simulations. Click on the three dots next to your simulation and copy the simulation ID.

Launch the simulation

Now you can launch a simulation from your CI/CD. If you want your Gatling test to run automatically with each commit, you'll need to add this to your workflow.

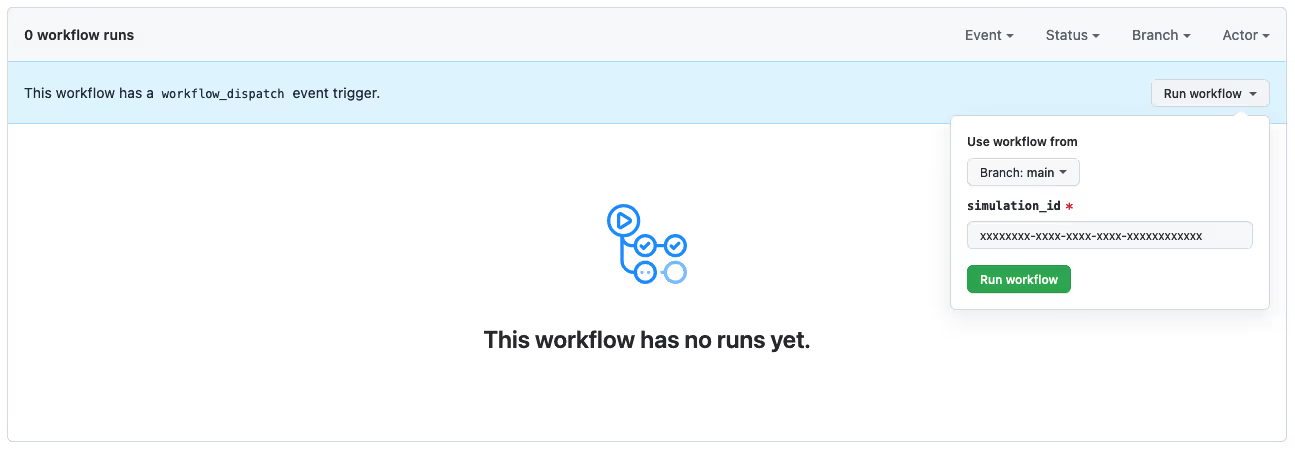

To run the simulation manually, go to your GitHub repository and click on Actions → Run Gatling Enterprise Simulation. You'll see this:

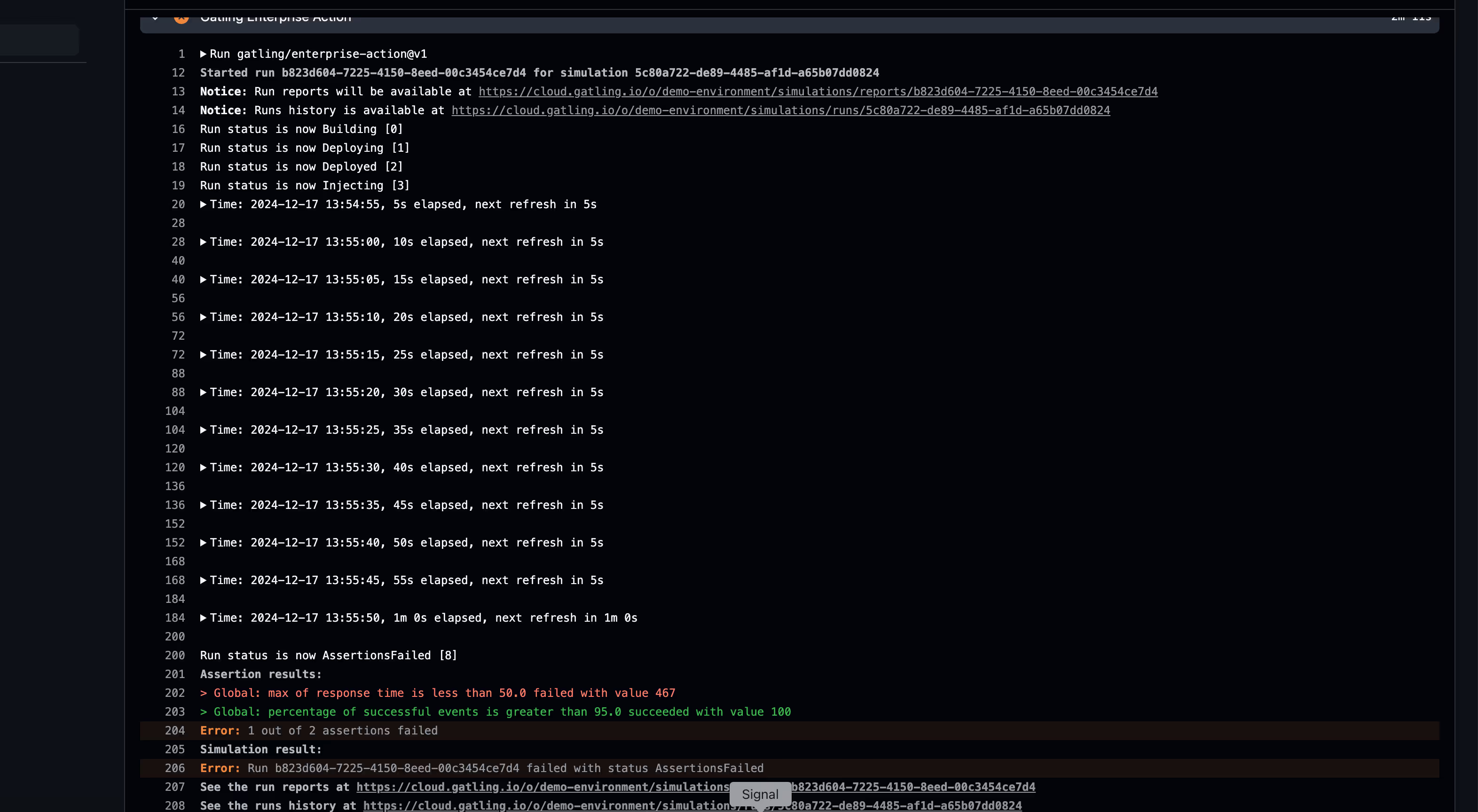

Simply paste your simulation ID and click "Run workflow." The GitHub action will trigger a new run for your simulation. You'll receive logs in your CI/CD pipeline and a detailed report on Gatling Enterprise.

You’ve streamlined your performance testing process by integrating Gatling Enterprise with GitHub Actions. This setup not only automates your load testing but also provides immediate feedback through Gatling Enterprise reports, helping you catch and address performance issues early in the development cycle.

Conclusion

Integrating Gatling and Koyeb offers a powerful toolkit for load testing AI applications. By following this guide, you can establish a robust load testing pipeline to ensure your AI applications perform well under real-world conditions. Remember to:

- Regularly update your test scenarios to reflect changes in your application

- Carefully analyze Gatling reports to identify and address performance issues

- Continuously refine your testing strategy based on insights gained from each test run

As the number of AI Applications deployed continues to grow, mastering these tools and techniques is crucial for maintaining high-performing and scalable applications.

For a more in-depth look at this topic, we recommend watching the replay of our webinar. In it, we provide a live walkthrough of this step-by-step guide. Additionally, we demonstrate how to implement effective load testing using Gatling and Koyeb.

FAQ

FAQ

Related articles

Ready to move beyond local tests?

Start building a performance strategy that scales with your business.

Need technical references and tutorials?

Minimal features, for local use only