Apache Kafka has become the backbone of modern event-driven architectures, powering everything from financial systems to real-time analytics. But as usage scales, so does the risk: can your Kafka infrastructure keep up with production traffic?

Whether you’re moving messages between microservices or streaming millions of events per second, Kafka performance bottlenecks often surface only under load, and that is when things break.

Kafka isn’t like your average API

Load testing Kafka is very different from testing traditional HTTP endpoints.

Kafka is distributed, asynchronous, and persistent, which makes it powerful but also harder to simulate at scale. You need to validate that:

- Producers can send messages consistently and efficiently

- Brokers can absorb spikes in throughput without slowing down produce and fetch requests

- Consumers keep up with the incoming message rate without building up lag or adding end-to-end latency
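The consumer check above usually comes down to consumer lag: for each partition, the gap between the log end offset and the group's committed offset. Here is a minimal sketch of that arithmetic in plain Python, assuming you have already collected offset snapshots from your own tooling. The function names, the sample numbers, and the choice to treat a partition with no commit as offset 0 are illustrative assumptions, not part of any Kafka client API.

```python
from typing import Dict, List


def consumer_lag(end_offsets: Dict[int, int],
                 committed_offsets: Dict[int, int]) -> Dict[int, int]:
    """Return per-partition lag: log end offset minus committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption: a partition with no committed offset counts from 0.
        committed = committed_offsets.get(partition, 0)
        # Clamp at 0 so a stale end-offset snapshot never yields negative lag.
        lag[partition] = max(end - committed, 0)
    return lag


def is_keeping_up(lag_samples: List[Dict[int, int]], tolerance: int = 0) -> bool:
    """True if total lag did not grow between the first and last sample."""
    totals = [sum(sample.values()) for sample in lag_samples]
    return totals[-1] - totals[0] <= tolerance


# Hypothetical snapshots taken at the start and end of a load test.
start = consumer_lag({0: 1500, 1: 1200, 2: 900}, {0: 1450, 1: 1200})
end = consumer_lag({0: 9000, 1: 8800, 2: 8700}, {0: 8990, 1: 8800, 2: 8650})

print(start)                        # {0: 50, 1: 0, 2: 900}
print(is_keeping_up([start, end]))  # True: total lag fell from 950 to 60
```

Lag that keeps growing over a sustained test suggests consumers cannot match the produce rate, even when each individual message is processed quickly.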

Most traditional load testing tools fall short. They assume a request-response model, can’t manage persistent connections, or don’t offer proper protocol-level support for Kafka.

The result? Teams rely on guesswork—or skip load testing entirely.

Better Kafka performance, fewer production incidents

Kafka often sits on the critical path of modern architectures, so in practice it can act as a single point of failure. A small bottleneck in Kafka can cascade into:

- Delayed data processing and system-wide slowdowns

- Missed SLAs and delayed business-critical alerts

- Over-provisioned infrastructure just to stay safe

Without proper load testing, you risk overpaying for cloud resources, under-delivering on reliability, and losing visibility into message delivery performance.

By validating Kafka pipelines with real-world traffic simulation, teams can:

- Prevent production slowdowns or outages

- Optimize partitioning, replication, and broker configurations

- Avoid unnecessary infrastructure costs by right-sizing Kafka clusters
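For the partitioning and right-sizing points above, a commonly cited rule of thumb is to take the target throughput and divide it by the throughput a single partition sustains on the producer side and on the consumer side, then use the larger result. The sketch below applies that heuristic. The function name and every number in it are hypothetical, and the per-partition figures are exactly what a load test is meant to measure rather than guess.

```python
import math


def estimate_partitions(target_mb_s: float,
                        producer_mb_s_per_partition: float,
                        consumer_mb_s_per_partition: float) -> int:
    """Rough minimum partition count for a target throughput (heuristic only)."""
    if min(target_mb_s, producer_mb_s_per_partition,
           consumer_mb_s_per_partition) <= 0:
        raise ValueError("throughput values must be positive")
    # Partitions needed so producers can reach the target rate.
    for_producers = math.ceil(target_mb_s / producer_mb_s_per_partition)
    # Partitions needed so consumers can drain at the target rate.
    for_consumers = math.ceil(target_mb_s / consumer_mb_s_per_partition)
    # The slower side determines the minimum.
    return max(for_producers, for_consumers)


# Hypothetical measurements: 200 MB/s target, 25 MB/s per partition when
# producing, 10 MB/s per partition when consuming.
print(estimate_partitions(200, 25, 10))  # 20
```

Treat the result as a starting point for a test run, not a final configuration: replication settings, message size, and consumer processing cost all shift the measured per-partition numbers.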

Load Test Kafka with Gatling

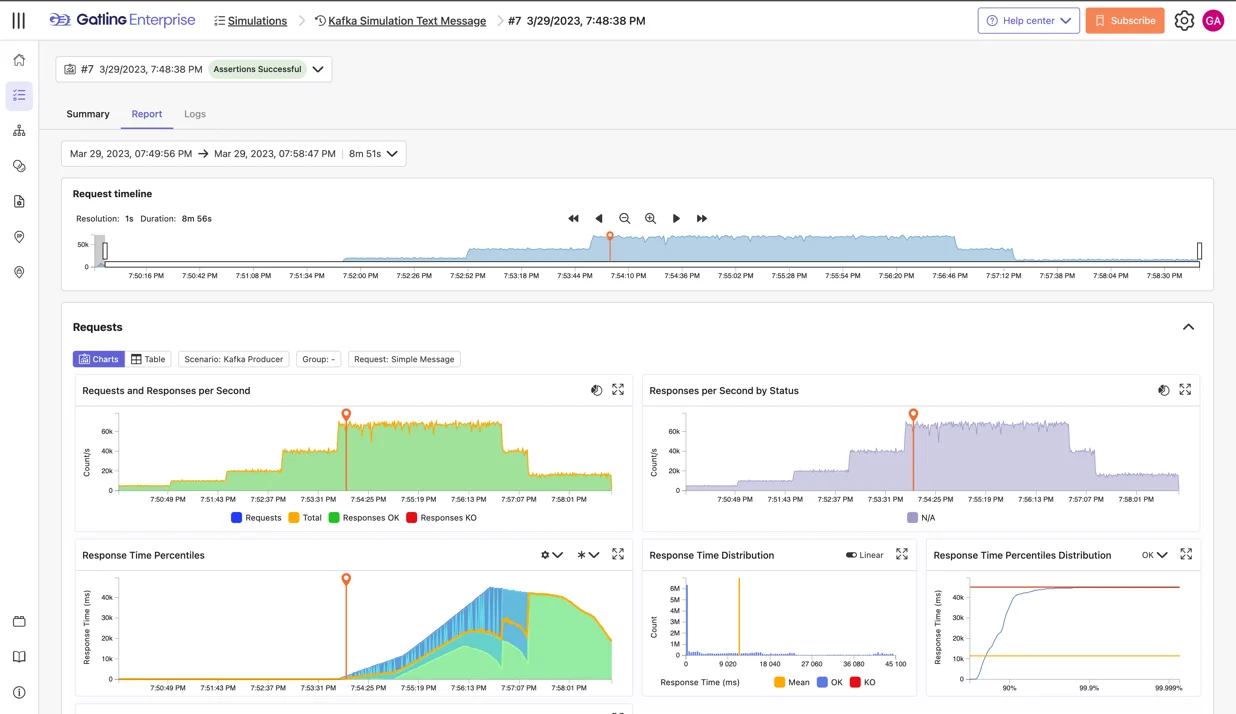

Gatling now supports Kafka protocol testing with a dedicated plugin, designed for developer-first teams that need repeatable, scriptable, and high-scale testing.

📘 Ready to simulate real-world Kafka traffic and validate your setup?

🔗 Follow our step-by-step tutorial →