Getting started with automated load testing

Last updated on Friday, January 2026

Automating load testing: from local dev to production confidence

Software teams today move fast. Code gets written, reviewed, and deployed to production in hours, not weeks. But that speed often comes at a cost: performance regressions sneak in unnoticed.

Slow endpoints, missed SLAs, or unstable releases can all result from treating performance testing as a manual chore rather than a core part of your software testing pipeline.

Yet for many teams, performance testing still feels like a bottleneck: something that happens too late, too slowly, or not at all. Manual testing can’t keep up with modern release cycles. And traditional load testing methods—running scripts by hand, scheduling one-off tests—don’t scale.

Enter automated load testing.

By shifting performance testing left, integrating it into CI/CD, and leveraging code-defined test scenarios, engineering teams can validate scalability from the first commit to production release. This guide unpacks how Gatling helps teams of all sizes implement continuous, intelligent, and automated load testing—and why it matters more than ever.

Why automated load testing matters more than ever

Automated performance testing and automated load testing change the game. Instead of relying on manual testing at the end of the development cycle, teams can embed performance checks throughout the delivery process. With automation, running a load test stops being a special event and becomes just another part of the build.

With the right load testing tools, teams can:

- Integrate testing into their CI/CD workflows

- Execute simulations against realistic traffic patterns

- Automatically stop tests when metrics go out of bounds

- View structured test results with full visibility into metrics and system performance

- Share insights across teams using real-time dashboards

The result is a smarter, faster feedback loop that catches performance issues before they reach users.

What automated performance testing really means

Many assume "automation" simply means running a test script via a command. But true test automation covers the entire lifecycle of a performance test, from test scenario creation to test execution, infrastructure provisioning, and alerting. Whether you're doing stress testing, spike testing, or scalability testing, automation ensures every part of your workflow is repeatable, observable, and version-controlled.

Automation means:

- Designing tests with code or no-code tools (e.g., using a recorder for web applications)

- Triggering simulations on merge, deploy, or a fixed schedule

- Provisioning infrastructure with IaC tools like Terraform or Helm

- Running distributed testing across regions or networks

- Analyzing and sharing insights programmatically or visually

Gatling Enterprise Edition supports all of this. It provides a modern performance testing tool built to automate testing from code to production.

Why teams choose Gatling for automation

Performance and load testing aren’t just QA responsibilities anymore—they’re cross-functional. Here's how different roles benefit from automated testing:

- Developers can validate changes locally or via CI and get fast feedback on application performance

- Quality engineers can define test cases, run them on a schedule, and share insights via Slack or Teams

- Performance engineers can scale infrastructure, define test scenarios with precision, and align results with SLAs

- Tech leads can set performance gates in CI/CD and standardize practices across teams

- Business leaders can use automated reports to make informed go/no-go decisions and track user satisfaction over time

With Gatling, each persona gets actionable data where they already work.

Eliminate flakiness, reduce manual testing, and scale with confidence

Manual performance testing is fragile. It’s easy to forget, misconfigure, or misinterpret. Automation addresses this by ensuring that:

- Every test is defined in code or YAML

- Test scripts run consistently across builds, branches, or releases

- Stop conditions end tests early to avoid wasted infrastructure and noisy results

- Your performance testing scenario is realistic and version-controlled

By automating the test execution process, teams reduce false alarms, missed regressions, and inconsistencies between environments.
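As a rough illustration of the stop-condition idea, the sketch below decides when a run should end early. It is a tool-agnostic Python example and not Gatling's own configuration; the function name, the 5% error ceiling, and the three-second window are all assumptions chosen for the example.

```python
from typing import Iterable, Optional


def first_abort_second(
    error_ratios: Iterable[float],
    max_error_ratio: float = 0.05,   # assumed ceiling: 5% failed requests
    sustained_seconds: int = 3,      # assumed window before aborting
) -> Optional[int]:
    """Return the 0-based second at which the run should stop, or None.

    The run stops once the per-second error ratio has exceeded
    `max_error_ratio` for `sustained_seconds` consecutive samples.
    """
    consecutive = 0
    for second, ratio in enumerate(error_ratios):
        # A single healthy second resets the counter, so brief blips are tolerated.
        consecutive = consecutive + 1 if ratio > max_error_ratio else 0
        if consecutive >= sustained_seconds:
            return second
    return None


# Hypothetical per-second error ratios from a run that degrades midway.
samples = [0.00, 0.01, 0.08, 0.02, 0.09, 0.12, 0.30, 0.45]
print(first_abort_second(samples))
```

Requiring several consecutive bad samples is one possible design choice: it avoids aborting on a single noisy second while still cutting a clearly failing run short.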

Realistic performance testing, minus the guesswork

Effective performance testing needs to reflect real-world usage. This means simulating concurrent users, mimicking traffic bursts, and monitoring resource utilization. Gatling helps teams:

- Simulate realistic user load using HTTP, WebSockets, JMS, Kafka, and more

- Execute continuous load testing as part of everyday development

- Use event-based data to capture every interaction with no sampling

- Perform stress testing and spike testing to understand limits under heavy load

- Maintain test scenarios that match how users interact with your web application

The goal isn’t just to test—it’s to gain confidence that your app performs well under pressure.
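To make "traffic bursts" and "heavy load" concrete, a load profile can be described as a sequence of phases, each with a start and end arrival rate. The following is a minimal Python sketch of that idea, not Gatling's injection DSL; the `Phase` type, the function names, and every number in the profile are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Phase:
    duration_s: int      # how long the phase lasts, in seconds
    start_rate: float    # new users per second at the start of the phase
    end_rate: float      # new users per second at the end of the phase


def rate_at(phases: List[Phase], t: float) -> float:
    """Linearly interpolated arrival rate (users/second) at time t."""
    elapsed = 0.0
    for phase in phases:
        if t < elapsed + phase.duration_s:
            progress = (t - elapsed) / phase.duration_s
            return phase.start_rate + progress * (phase.end_rate - phase.start_rate)
        elapsed += phase.duration_s
    return 0.0  # the profile is over


def total_users(phases: List[Phase]) -> float:
    """Total users injected: area under the rate curve, one trapezoid per phase."""
    return sum(p.duration_s * (p.start_rate + p.end_rate) / 2 for p in phases)


# Hypothetical profile: ramp to 20 users/s, hold, spike to twice that, recover.
profile = [
    Phase(60, 0, 20),    # ramp-up
    Phase(120, 20, 20),  # steady state
    Phase(30, 40, 40),   # spike at 2x the steady rate
    Phase(60, 20, 20),   # recovery
]

print(rate_at(profile, 30))   # arrival rate halfway through the ramp
print(total_users(profile))   # users injected over the whole run
```

Keeping the profile as plain data means it can live in version control next to the test and be reviewed like any other change.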

Built for your stack, from Azure Load Testing to GitHub Actions

Modern teams don’t want another siloed tool. They want a load testing tool that integrates directly into their delivery pipelines. Gatling was built with that in mind:

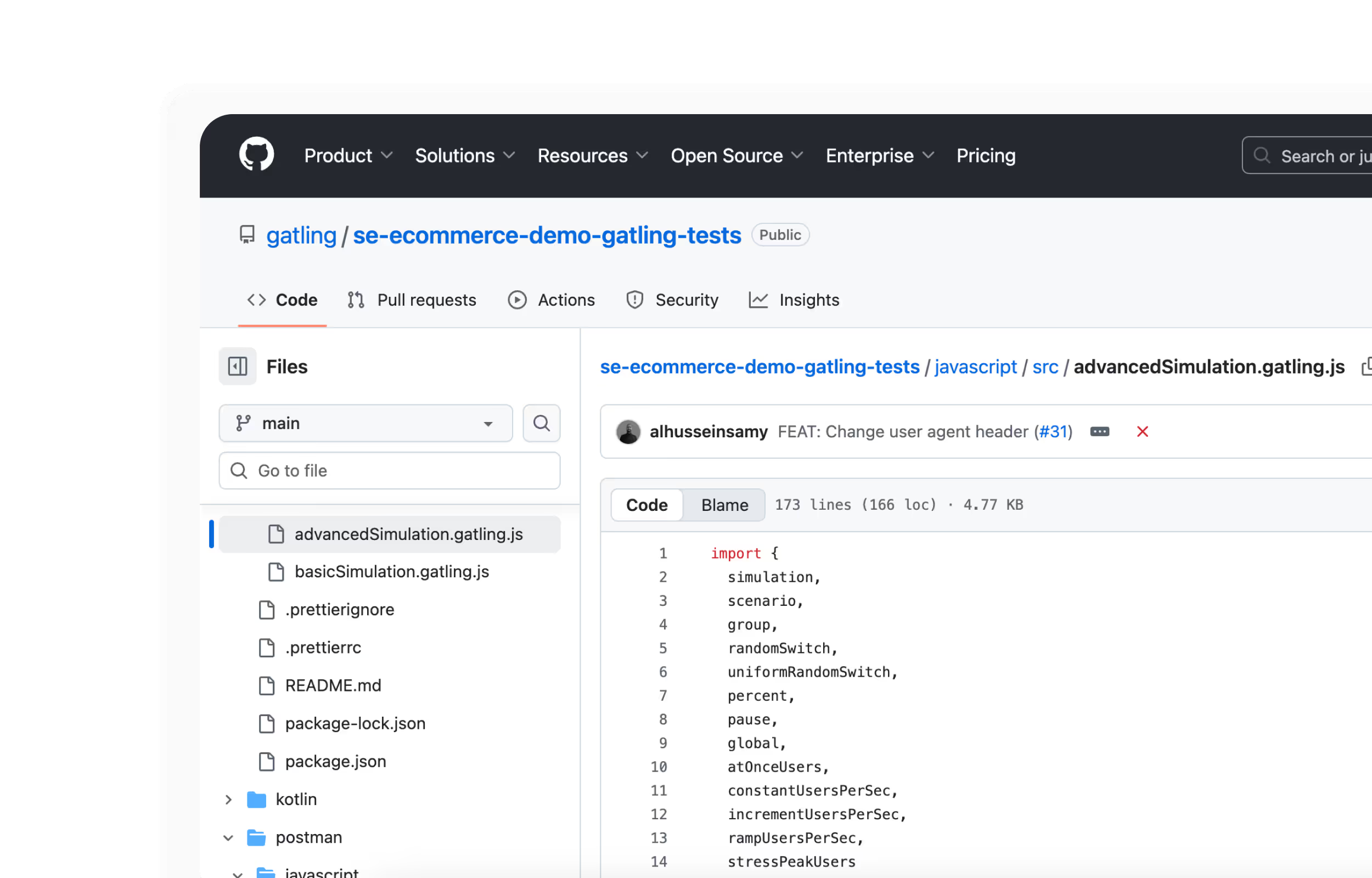

- Works with Java, Scala, Kotlin, TypeScript, and JavaScript

- Supports Maven, Gradle, npm, sbt

- Compatible with CI/CD tools like Jenkins, GitLab, Azure DevOps, and GitHub Actions

- Easily integrates with Terraform, Helm, AWS CDK, and other infrastructure-as-code tools

- Pairs with monitoring stacks via APIs or plugins for platforms like Datadog or Dynatrace

Whether you're deploying to Kubernetes, managing Azure Load Testing, or working from a local script, Gatling slots in without friction.

Visibility from test case to insight

Automation alone isn’t enough. You need to see what happened, why, and how to act on it. Gatling Enterprise Edition provides:

- Live test dashboards and status views

- Historical comparisons across branches, builds, or environments

- Metrics on DNS resolution, TCP retries, TLS handshakes, and throughput

- Open APIs to trigger alerts, tickets, or rollbacks when thresholds fail

- Shareable links for secure, no-login access to test results

From functional testing to unit testing, teams are embracing observability. Performance tests should be no different.

Deploy anywhere, simulate everything

Gatling supports multiple deployment modes so you can test from where your users are:

- Public cloud: Gatling-managed AWS regions for instant scale

- Private cloud: Deploy inside your AWS, Azure, or GCP environments

- On-premises: For teams with tight controls over data and infrastructure

- Hybrid: Combine public and private locations to reflect true usage patterns

Whether you’re testing a public-facing API or an internal app behind strict firewalls, Gatling has a solution.

Start small, grow fast

You don’t need to master everything at once. Many teams begin by recording a simple user flow, converting it to code, and committing it. Then they:

- Trigger the test in CI/CD

- Set simple performance thresholds (e.g., 95th percentile < 500ms)

- Add infrastructure automation

- Expand coverage to include API testing, web application flows, or internal services

The more you use it, the more value automation delivers. It’s a compounding benefit.
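A simple threshold such as "95th percentile < 500ms" can be turned into a pass/fail signal that a CI job understands. The sketch below assumes a summary of metrics has already been produced by the load test; the metric names, limits, and function names are illustrative assumptions and not Gatling's assertion syntax.

```python
from typing import List, Mapping, Optional

# Hypothetical limits: metric name -> upper bound the value must stay below.
DEFAULT_LIMITS = {"p95_ms": 500.0, "error_rate": 0.01}


def violations(
    summary: Mapping[str, float],
    limits: Optional[Mapping[str, float]] = None,
) -> List[str]:
    """List every threshold that the summary breaks or fails to report."""
    limits = DEFAULT_LIMITS if limits is None else limits
    problems = []
    for metric, limit in limits.items():
        value = summary.get(metric)
        if value is None:
            # A missing metric is treated as a failure rather than a silent pass.
            problems.append(f"{metric}: missing from summary")
        elif value >= limit:
            problems.append(f"{metric}: {value} is not below {limit}")
    return problems


def exit_code(
    summary: Mapping[str, float],
    limits: Optional[Mapping[str, float]] = None,
) -> int:
    """0 when every threshold holds, 1 otherwise (suitable for sys.exit in CI)."""
    return 1 if violations(summary, limits) else 0


# Hypothetical summaries from two builds.
good_build = {"p95_ms": 420.0, "error_rate": 0.002}
slow_build = {"p95_ms": 640.0, "error_rate": 0.002}

print(exit_code(good_build), violations(good_build))
print(exit_code(slow_build), violations(slow_build))
```

A CI step would pass the returned code to `sys.exit`, so a non-zero result fails the build.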

Real-world load testing patterns and pitfalls

Industry trends show growing automation: By 2023, over 74% of enterprises had added automated load testing to their delivery pipelines. Teams that integrate performance testing in CI/CD detect around 76% of bottlenecks pre-release, compared to just 31% when relying on manual testing. Yet only about 30% of teams automate performance tests at all—revealing both adoption momentum and opportunity.

Testing patterns in practice: Mature teams run performance tests daily or per build. Others rely on on-demand runs before release. Peak traffic or 2x peak are common stress levels. While most tests occur in staging, some teams conduct safe production testing with test accounts and real data—especially in microservice architectures or distributed systems.

Frequent bottlenecks: Database latency, thread pool exhaustion, message queue backlogs, CPU saturation, and API rate limits commonly surface during automated tests. Tools like Gatling help surface these problems early, before they degrade production experience.

Common mistakes to avoid:

- Relying on averages instead of percentiles (masking long-tail slowness)

- Only testing late in the cycle or post-release

- Skipping realistic think time or data

- Running tests in unrepresentative environments

- Failing to analyze results across multiple metrics

- Treating load testing as a one-off rather than an ongoing discipline
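The first mistake in the list, relying on averages, is easy to see with a few numbers. The sample below is invented for illustration: 94 fast requests and 6 very slow ones, with percentiles taken by nearest rank on the sorted data.

```python
import statistics

# Hypothetical response times in milliseconds: 94 fast requests, 6 very slow ones.
samples_ms = [100] * 94 + [3000] * 6

ordered = sorted(samples_ms)
mean_ms = statistics.mean(samples_ms)
p50_ms = ordered[49]   # 50th of 100 samples (nearest rank)
p95_ms = ordered[94]   # 95th of 100 samples (nearest rank)

print(f"mean={mean_ms} ms, p50={p50_ms} ms, p95={p95_ms} ms")
```

The mean (274 ms) would pass a 500 ms budget, yet the 95th percentile is 3000 ms: more than one request in twenty is very slow, and only the percentile reveals it.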

Say goodbye to flaky test scripts and hello to confidence

Automated load testing isn’t just for large enterprises. It’s for any team that cares about:

- Releasing without fear

- Knowing their application won’t break under traffic

- Aligning testing with engineering workflows

- Making performance testing repeatable, cost-effective, and continuous

With the rise of continuous testing, continuous performance testing, and DevOps practices, having the right testing tool in your pipeline is no longer optional.

Summary: Let automation do the heavy lifting

Manual testing may still have its place, but for scalable, reliable performance coverage, automation is essential. With Gatling, teams can:

- Run automated performance tests across every build and release

- Use load testing tools that match their stack and scale

- Test real-world scenarios with concurrent users and realistic loads

- Catch bottlenecks before users do

- Visualize and share results with zero overhead

Whether you’re evaluating performance testing tools, exploring test automation, or looking to modernize your software testing stack, Gatling Enterprise Edition provides a fast, flexible, and developer-friendly approach to performance assurance.

Want to experience it yourself? Request a demo or try Gatling Enterprise Edition for free and discover how you can automate load testing from code to production without sacrificing control or coverage.

FAQ

Automated load testing is the process of simulating traffic through scripts and CI/CD triggers, catching performance issues early without manual steps.

Automating load tests helps catch bottlenecks before release and blocks bad builds automatically, so performance stays reliable.

Gatling is code-first, integrates with your stack, and uses high-res event metrics—ideal for CI/CD and modern pipelines.

Automate load testing by scripting realistic user flows, running mostly HTTP-level tests, and integrating them into CI. Use ramps, think time, and varied data. Define SLOs (p95 latency, error rate), add abort thresholds, and collect infra + app metrics so failures are explainable, not just visible.
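Think time and varied data can be sketched in a few lines. This is a generic Python illustration, not Gatling's pause or feeder API; the helper names, the 1 to 5 second pause range, and the sample records are assumptions.

```python
import itertools
import random
from typing import Dict, Iterator, List


def think_time_s(rng: random.Random, low: float = 1.0, high: float = 5.0) -> float:
    """Random pause between user actions, drawn uniformly from [low, high] seconds."""
    return rng.uniform(low, high)


def circular_feeder(records: List[Dict[str, str]]) -> Iterator[Dict[str, str]]:
    """Cycle through test data so each virtual user picks up the next record."""
    return itertools.cycle(records)


rng = random.Random(42)  # seeded so a run can be reproduced
users = circular_feeder([
    {"username": "alice", "query": "laptop"},
    {"username": "bob", "query": "headphones"},
])

for _ in range(3):
    user = next(users)
    pause = think_time_s(rng)
    print(f"{user['username']} searches {user['query']!r}, then pauses {pause:.1f}s")
```

Without pauses and varied inputs, virtual users hit the same cached path as fast as possible, which tends to produce results that look better or worse than real traffic would.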
