HTTP/2 features that can improve your application performance

Last updated on Monday, September 2025

If you use a web browser like Chrome or Firefox, you will see “http://” or “https://” at the beginning of a website address. HTTP (Hypertext Transfer Protocol) is the most widely used protocol for exchanging data on the web. It allows your browser to exchange information with web servers, making nearly everything you do on a website possible, including adding items to a digital shopping cart or browsing social media. For HTTP (or any protocol) to work, both sides must follow a commonly understood set of communication rules, similar to the grammar rules you learn when studying a language.

HTTP was first developed in 1989 and has evolved over the last 35 years to meet changing communication needs. Three major versions are in use today:

- HTTP/1.1

- HTTP/2

- HTTP/3

This blog post explores the differences between HTTP/2 and HTTP/1.1 and how these differences affect load-testing strategies.

If HTTP/3 is available, why are we still talking about HTTP/2 and HTTP/1.1?

HTTP/2 and HTTP/1.1 still make up the majority of HTTP usage, around 70% of websites and applications. Both protocols will be around for many more years and are still relevant to new application development.

In the constantly changing world of web technologies, HTTP/2 arrived as a major revision of the protocol, promising significant improvements in performance, efficiency, and user experience over HTTP/1.1.

If you are interested in load testing and HTTP/3, post a request in the Gatling community forum, and we will try to cover the topic.

New and Enhanced HTTP/2 features

This article covers the significant features added to HTTP/2 and what they offer to developers to enhance user experience or optimize application performance. The features are:

- Multiplexing and concurrent streams

- Binary protocol and efficiency

- Header compression and reduced overhead

- Server push and proactive resource delivery

- Stream prioritization and dependency

Multiplexing and Concurrent Streams

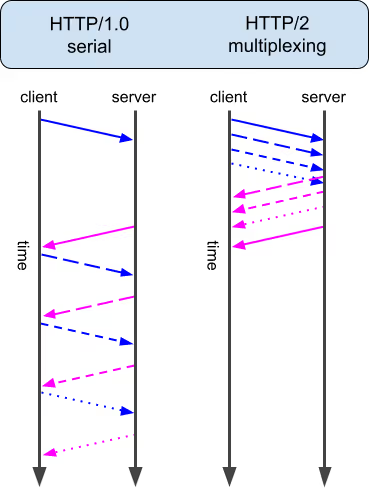

Multiplexing is the HTTP/2 feature that offers the most noticeable performance improvement compared to HTTP/1.1. HTTP/2 allows multiple streams (each carrying a request and its response) to be sent and received concurrently over a single TCP connection. This means that your web browser only needs to open a single connection to a server, and it can then have many requests and responses in flight at the same time.

In contrast, an HTTP/1.1 connection handles one request at a time: the client sends a request and waits for the response before sending the next one. In modern web applications, that often means requesting the site HTML, CSS, JavaScript, and static resources in succession. To work around this limitation, browsers using HTTP/1.1 usually open several TCP connections to the same host (typically up to six) for parallel requests, which increases resource usage on both client and server and can create performance bottlenecks.

The following illustration shows how HTTP/1.1 requests and responses are sequential, while HTTP/2 can send and receive multiple requests in parallel. This allows HTTP/2 to significantly reduce the total request-response time for applications that need to load multiple resources.

Image courtesy of Accreditly
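To make the difference concrete, here is a minimal back-of-the-envelope sketch in Python. It is a simplified model, not a measurement: it assumes a single connection, a fixed round-trip time, and hypothetical transfer times per resource, and it ignores connection setup, TLS, TCP slow start, and server processing. The function names and numbers are illustrative assumptions.

```python
def sequential_time(transfer_ms, rtt_ms):
    """HTTP/1.1-style model: one request at a time on one connection.

    Every request pays a full round trip before its response arrives.
    """
    return sum(rtt_ms + t for t in transfer_ms)


def multiplexed_time(transfer_ms, rtt_ms):
    """HTTP/2-style model: all requests are sent at once on one connection.

    The round trip is paid once; the responses still share the same
    bandwidth, so the transfer times add up.
    """
    return rtt_ms + sum(transfer_ms)


# Hypothetical page: HTML, CSS, JavaScript, and one image (transfer time in ms).
resources = [20, 10, 30, 15]
rtt = 50  # assumed round-trip time in ms

print(sequential_time(resources, rtt))   # 275
print(multiplexed_time(resources, rtt))  # 125
```

In this model the saving comes entirely from paying the round-trip latency once instead of once per resource, so the gap grows with the number of resources and with network latency.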

Binary Protocol and Efficiency

HTTP/2 uses a binary protocol, whereas HTTP/1.1 relies on a text-based protocol. HTTP/2 messages are split into frames, each starting with a fixed-size header that states the frame's length, type, and stream. A receiver can therefore read a frame without scanning text for line breaks and delimiters, which makes parsing simpler, faster, and less error-prone. While HTTP/1.1's text-based protocol is human-readable, HTTP/2's binary format favors machine processing and contributes to better performance.
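As an illustration of what binary framing looks like, the sketch below builds and parses the 9-byte header that precedes every HTTP/2 frame: a 24-bit payload length, an 8-bit type, an 8-bit flags field, and one reserved bit followed by a 31-bit stream identifier. The helper names are our own and the example only handles the header, not the frame payload.

```python
import struct

# Frame type codes defined by the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


def pack_frame_header(length, frame_type, flags, stream_id):
    """Encode a frame header into its 9-byte wire format."""
    if not 0 <= length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    # struct has no 24-bit field, so the length is split into 8 + 16 bits.
    return struct.pack(">BHBBI", length >> 16, length & 0xFFFF,
                       frame_type, flags, stream_id)


def parse_frame_header(data):
    """Decode the first 9 bytes of a frame into a dictionary."""
    if len(data) < 9:
        raise ValueError("a frame header is 9 bytes long")
    hi, lo, frame_type, flags, stream = struct.unpack(">BHBBI", data[:9])
    return {
        "length": (hi << 16) | lo,
        "type": FRAME_TYPES.get(frame_type, "UNKNOWN"),
        "flags": flags,
        "stream_id": stream & 0x7FFFFFFF,  # mask out the reserved bit
    }


# A HEADERS frame on stream 1 with END_STREAM (0x1) and END_HEADERS (0x4) set.
header = pack_frame_header(length=13, frame_type=0x1, flags=0x5, stream_id=1)
print(header.hex())                # 00000d010500000001
print(parse_frame_header(header))
```

Because every field sits at a fixed offset, the parser is a single `struct.unpack` call. The stream identifier in each header is also what lets frames from different streams be interleaved on one connection.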

Header Compression and Reduced Overhead

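Another notable feature of HTTP/2 is header compression, which minimizes redundant header information during communication. HTTP/1.1 sends headers as uncompressed plain text and repeats them in full on every request, even when most values (such as cookies or the user agent) never change, which increases the amount of data transferred and adds latency. HTTP/2 uses a compression format called HPACK: both sides keep a table of header fields they have already exchanged, so a repeated header can be sent as a short index instead of the full text, and new values can be Huffman-encoded.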
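The effect of indexing repeated headers can be sketched with a toy model. The class below is not HPACK: it has no static table, no Huffman coding, and no table size limit, and the byte costs are rough assumptions. It only shows why a second request with mostly identical headers is much cheaper than the first. All names and header values are hypothetical.

```python
class ToyHeaderCompressor:
    """Toy model of table-based header compression (not real HPACK)."""

    def __init__(self):
        # Maps (name, value) pairs already sent to a table index.
        self.table = {}

    def encoded_size(self, headers):
        """Return the approximate number of bytes needed to send `headers`."""
        size = 0
        for name, value in headers:
            key = (name, value)
            if key in self.table:
                size += 1  # assumed cost: one byte for an index reference
            else:
                # Assumed cost: one length byte each for name and value.
                size += len(name) + len(value) + 2
                self.table[key] = len(self.table) + 1
        return size


def plain_text_size(headers):
    """Size of the same headers in HTTP/1.1 style: 'name: value' plus CRLF."""
    return sum(len(name) + 2 + len(value) + 2 for name, value in headers)


request = [
    (":method", "GET"),
    (":path", "/index.html"),
    ("user-agent", "ExampleBrowser/1.0"),
    ("cookie", "session=abc123"),
]

compressor = ToyHeaderCompressor()
first = compressor.encoded_size(request)
second = compressor.encoded_size(request)  # every header is now in the table

print("plain text, every request:", plain_text_size(request))
print("toy compression, first request:", first)
print("toy compression, repeated request:", second)
```

In this model the repeated request costs one byte per header, while the plain-text version costs the same on every request. Real savings depend on how many headers repeat across requests.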

Server Push and Proactive Resource Delivery

HTTP/2 introduced server push, allowing the server to proactively push resources to the client before they are requested. Server push was intended to optimize page load times by anticipating and delivering essential resources upfront, enhancing the user experience.

HTTP/1.1, in contrast, relies on the client to request all resources. This means additional round trips to acquire the necessary data and increased latency. Server push seemed like a great idea, but it didn’t live up to the hype. Google removed support for the feature from the Chrome browser in version 106. Server push failed because of poor adoption and the availability of better alternatives, such as preload hints and the 103 Early Hints status code.

Stream Prioritization and Dependency

HTTP/2 provides stream prioritization and dependency mechanisms, allowing the client to signal that more critical resources should be delivered first. These signals are hints: the server decides how to act on them, so the benefit depends on the server implementation. HTTP/1.1 has no built-in features for explicit resource prioritization, which can result in suboptimal resource allocation and slower page load times. When it is honored, stream prioritization in HTTP/2 makes resource delivery more efficient, especially in complex web applications.
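As a rough sketch of how weights can translate into delivery order, the example below splits bandwidth between sibling streams in proportion to their weights, which in the original HTTP/2 specification range from 1 to 256. It ignores stream dependencies entirely, and the function name, stream IDs, and weights are illustrative assumptions rather than what any particular server does.

```python
def bandwidth_shares(weights):
    """Split bandwidth between sibling streams in proportion to their weight.

    `weights` maps a stream ID to a weight between 1 and 256.
    Returns a mapping from stream ID to its fraction of the bandwidth.
    """
    for stream_id, weight in weights.items():
        if not 1 <= weight <= 256:
            raise ValueError(f"stream {stream_id}: weight must be 1..256")
    total = sum(weights.values())
    return {stream_id: weight / total for stream_id, weight in weights.items()}


# Hypothetical page load: render-blocking CSS gets the highest weight.
streams = {
    1: 256,  # stylesheet
    3: 128,  # script
    5: 128,  # hero image
}

for stream_id, share in bandwidth_shares(streams).items():
    print(f"stream {stream_id}: {share:.0%} of bandwidth")
```

With these weights the stylesheet receives half of the available bandwidth and the other two streams a quarter each, so the resource that blocks rendering finishes sooner.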

Summary

HTTP/2 is a significant advancement in web communication protocols, offering features such as multiplexing, a binary protocol, and header compression that improve performance and efficiency compared to HTTP/1.1. By embracing HTTP/2, your teams can deliver faster, more responsive, and more scalable experiences to users worldwide.
